Serverless and event-driven design thinking

In this post we will dig deep into how I design event-driven applications and services. What steps do I normally take during the design? What events do we have? Which services should we use? How do we tie everything together? We will round it off by looking at some commonly used AWS services and some best practices.

Throughout the post we will use a fictional service, an event-driven file-manager, as our example. First, let's take a look at what an event-driven architecture or application is.

What is event-driven architecture

Event-driven architecture is a system of loosely-coupled services that handle events asynchronously.

Many have worked with a system where a client calls a backend service over a RESTful API, and the backend service then calls a different service, again over a RESTful API or over RPC. This is the classic request-response pattern: a synchronous flow where the client expects a response back. This flow is very easy to implement, since the client simply waits for the response to its request. However, synchronous communication can reduce the performance of the application, because each caller is blocked until every downstream call has completed. Also, if one of the downstream services is experiencing problems, that directly affects the calling service and reduces its overall uptime.

With an asynchronous pattern these problems are smaller. Services still communicate, but they don't expect an immediate response to a request. A service can continue to carry out other work while it waits for the response. This does, however, introduce complexity when it comes to debugging and tracing.
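To make the difference concrete, here is a minimal sketch that simulates both styles in plain Python. The failing `downstream_service`, the `DownstreamUnavailable` exception, and the in-process `queue.Queue` standing in for a managed queue are all hypothetical. The sketch is only meant to show how a synchronous caller inherits a downstream failure, while an asynchronous caller can accept the work and return.

```python
import queue


class DownstreamUnavailable(Exception):
    """Raised by the simulated (hypothetical) downstream service."""


def downstream_service(file_id: str) -> str:
    # Hypothetical downstream dependency that is currently failing.
    raise DownstreamUnavailable(f"cannot process {file_id}")


def handle_sync(file_id: str) -> str:
    # Request-response: the caller can only answer once the downstream call
    # succeeds, so the downstream failure becomes the caller's failure.
    return downstream_service(file_id)


def handle_async(file_id: str, outbox: "queue.Queue[dict]") -> str:
    # Asynchronous: hand the work to a queue and return right away.
    # A separate consumer processes the message once the downstream is healthy.
    outbox.put({"file_id": file_id})
    return "accepted"


outbox: "queue.Queue[dict]" = queue.Queue()
print(handle_async("file-1", outbox))  # accepted
try:
    handle_sync("file-1")
except DownstreamUnavailable as err:
    print("sync call failed:", err)  # sync call failed: cannot process file-1
```

The trade-off mentioned above is also visible here. The asynchronous caller no longer knows when, or whether, the work succeeded. That is why tracing becomes harder.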

In an event-driven architecture we use events, or messages, to communicate. Normally there are two types of messages: commands and events. What is the difference?

Commands vs Events

What both have in common is that they are messages, in JSON format, immutable, and observable. The main difference is that a command represents an intention with a target audience, like CreateUser. Events are in the past tense; they are facts stating that something has happened, like UserCreated.
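A small sketch can make the naming and immutability rules tangible. It models the CreateUser command and the UserCreated event as frozen dataclasses, which is one way to get immutability in Python. The `handle_create_user` function, its `new_user_id` parameter, and the `to_json` helper are hypothetical illustrations and not part of any framework.

```python
import json
from dataclasses import FrozenInstanceError, asdict, dataclass


@dataclass(frozen=True)
class CreateUser:
    """Command: an intention, addressed to the service that owns users."""
    email: str


@dataclass(frozen=True)
class UserCreated:
    """Event: a fact, named in the past tense, that has already happened."""
    user_id: str
    email: str


def handle_create_user(command: CreateUser, new_user_id: str) -> UserCreated:
    # The owning service acts on the command, then records the outcome as an event.
    return UserCreated(user_id=new_user_id, email=command.email)


def to_json(message) -> str:
    # Serialize with the message type so consumers can tell messages apart.
    return json.dumps({"type": type(message).__name__, **asdict(message)})


event = handle_create_user(CreateUser(email="jane@example.com"), "user-1")
print(to_json(event))
# {"type": "UserCreated", "user_id": "user-1", "email": "jane@example.com"}

try:
    event.email = "changed@example.com"  # frozen dataclass: messages are immutable
except FrozenInstanceError:
    print("events cannot be modified")
```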

Commands can come from clients over a RESTful API. The target service then consumes the command asynchronously and runs it in the background. When the command has been handled, an event can be sent to inform the client, and other services, about it.

Events are consumed asynchronously in response to a change in the system. We typically send events via queues, like SQS, or event brokers, like EventBridge.

Event producers and consumers

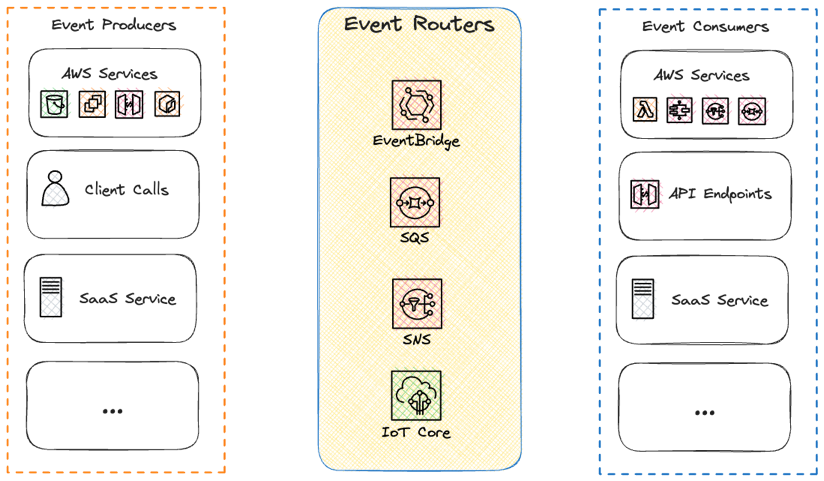

Let's look at producers, routers, and consumers.

Event producers are systems or services that create and publish events and commands. AWS services, clients, SaaS applications, and more can be producers.

Event routers are services or systems that route events and commands to consumers. These can be queues, event brokers, etc. Several AWS services can act as message routers, such as SQS, SNS, IoT Core, and EventBridge.

Event consumers are systems or services that react to, or consume, specific events or commands and carry out work accordingly. Our consumers can be implemented with AWS services, or they can be other SaaS services, API endpoints, and more.

One of the key aspects of an event-driven architecture is that producers and consumers are completely decoupled.
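The decoupling between producers and consumers can be illustrated with a toy, in-memory router. This is a hypothetical sketch and not how EventBridge or SNS work internally. Delivery here is synchronous and in-process, whereas a real router delivers asynchronously and durably. What it does show is that the producer only talks to the router, so a new consumer can be added without touching the producer.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Consumer = Callable[[dict], None]


class EventRouter:
    """Toy in-memory router that delivers events to consumers by event type."""

    def __init__(self) -> None:
        self._consumers: DefaultDict[str, List[Consumer]] = defaultdict(list)

    def subscribe(self, event_type: str, consumer: Consumer) -> None:
        self._consumers[event_type].append(consumer)

    def publish(self, event_type: str, detail: dict) -> int:
        # The producer only knows the router, never the consumers.
        # Delivery is synchronous here; a real router delivers asynchronously.
        consumers = self._consumers.get(event_type, [])
        for consumer in consumers:
            consumer(detail)
        return len(consumers)


router = EventRouter()
index_updates: List[dict] = []
audit_log: List[dict] = []
router.subscribe("FileCreated", index_updates.append)
router.subscribe("FileCreated", audit_log.append)  # new consumer, producer unchanged
delivered = router.publish("FileCreated", {"FilePath": "docs/report.pdf"})
print(delivered)  # 2
```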

Benefits of event-driven architecture

There are many benefits of event-driven architectures. Out of the box they bring team autonomy, scalability, and real-time responsiveness, which is really important in an IoT system.

I would say that the number one benefit is the flexibility and resiliency an event-driven design brings to the table. As long as we keep backward compatibility, meaning we don't remove fields from our events or change data types, consumers will not be impacted by producer changes. We can also add new consumers in an easy and flexible manner.

With that introduction to event-driven architecture, let's return to our fictional system and start designing it.

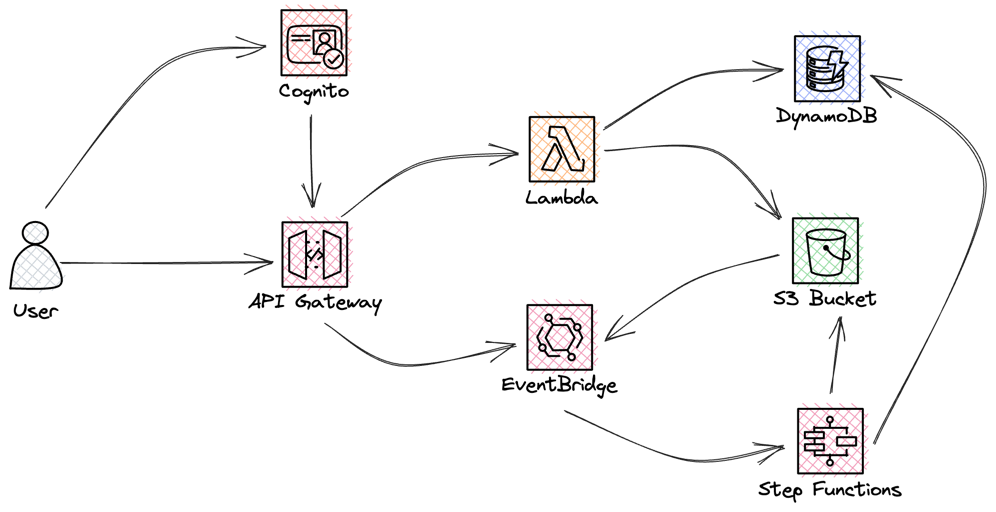

File manager service overview

First of all, I start by creating an architecture overview. This might not be the final design, but I need an overview. In the case of our file-manager, we need an API Gateway that users can interact with to create, list, read, and delete files. An Amazon Cognito User Pool is probably needed for authentication and authorization. Files will be stored in S3, and DynamoDB will be used as a file index. Since I'm planning an event-driven architecture, EventBridge is needed as well.

The next step is to sort out which commands and events we have in the service.

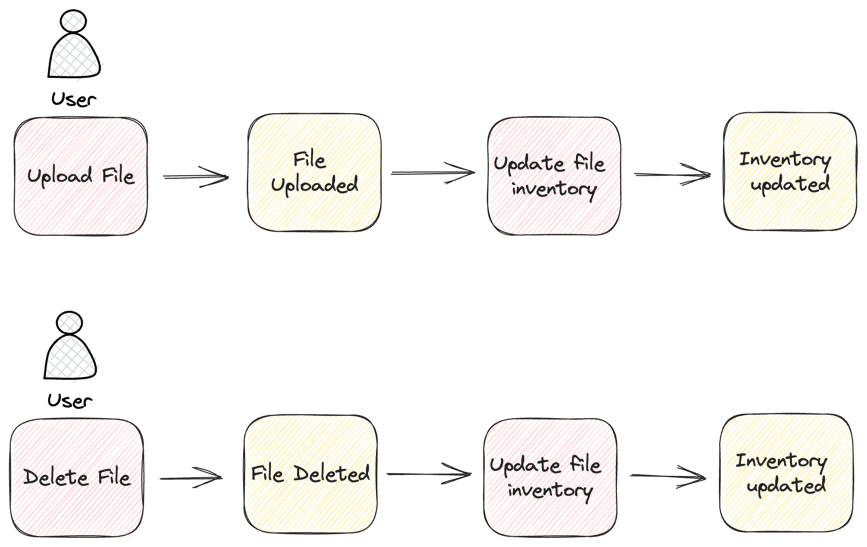

Event Storming and design

I would do a lightweight version of EventStorming, often with one or two people from the team, where we discuss commands, events, etc. We would end up with simple drawings like this.

But it doesn't end there. With this overview done, I can start designing how the commands and events are going to be consumed. What needs to be done synchronously, with a result returned to the user, and what can be done asynchronously? This question mostly concerns commands. For events, the consumption model can be designed. Is a queue needed? Can the compute be invoked directly from the event broker? Questions like these need answers. At the same time, I also create the actual cloud design and decide how services should interact.

So if we now return to our file-manager and combine what we learned during event-storming with the overview that was initially created, we can produce a more detailed design.

Cloud design

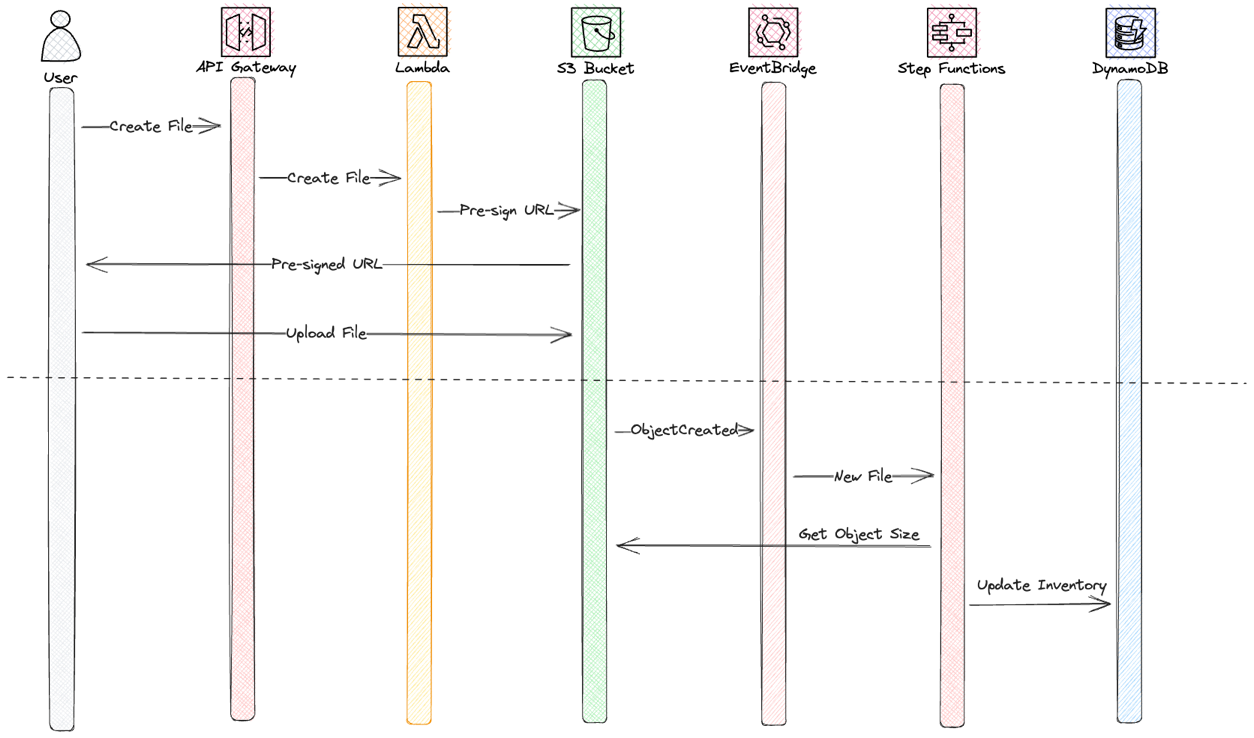

With the basic event-storming done, I can now start creating a detailed cloud design: exactly which services to use and how they should be integrated. I create a flow chart for each interaction or event that was identified in the event-storming process.

The flow chart shows the AWS services used and what action should be taken. Now let's do the design for the user interaction that creates a new file.

The first part, above the dashed line, is a synchronous workflow where the user creates a new file and uploads it. The second part runs as an asynchronous workflow, where the file inventory is updated. Several services are used.
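The asynchronous inventory update could be sketched as follows. This sketch assumes the update is triggered by S3's "Object Created" event delivered through EventBridge. That requires EventBridge notifications to be enabled on the bucket. The sample event below is trimmed to the fields the function reads. The DynamoDB item layout (`pk`, `Bucket`, `Key`, `Size`, `CreatedAt`) and the bucket name are hypothetical. In a Lambda function, the returned dict could be written with boto3's `Table.put_item(Item=...)`.

```python
from typing import Any, Dict


def to_index_item(event: Dict[str, Any]) -> Dict[str, Any]:
    """Build a (hypothetical) DynamoDB file-index item from an S3 "Object Created" event."""
    if event.get("source") != "aws.s3" or event.get("detail-type") != "Object Created":
        raise ValueError("expected an S3 'Object Created' event")
    detail = event["detail"]
    bucket = detail["bucket"]["name"]
    key = detail["object"]["key"]
    return {
        "pk": f"FILE#{bucket}/{key}",  # hypothetical key schema
        "Bucket": bucket,
        "Key": key,
        "Size": detail["object"].get("size", 0),
        "CreatedAt": event["time"],  # timestamp from the EventBridge envelope
    }


# Trimmed sample event; a real one carries more fields (id, account, region, ...).
sample = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "time": "2023-01-01T12:00:00Z",
    "detail": {
        "bucket": {"name": "file-manager-files"},
        "object": {"key": "docs/report.pdf", "size": 1024},
    },
}
print(to_index_item(sample)["pk"])  # FILE#file-manager-files/docs/report.pdf
```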

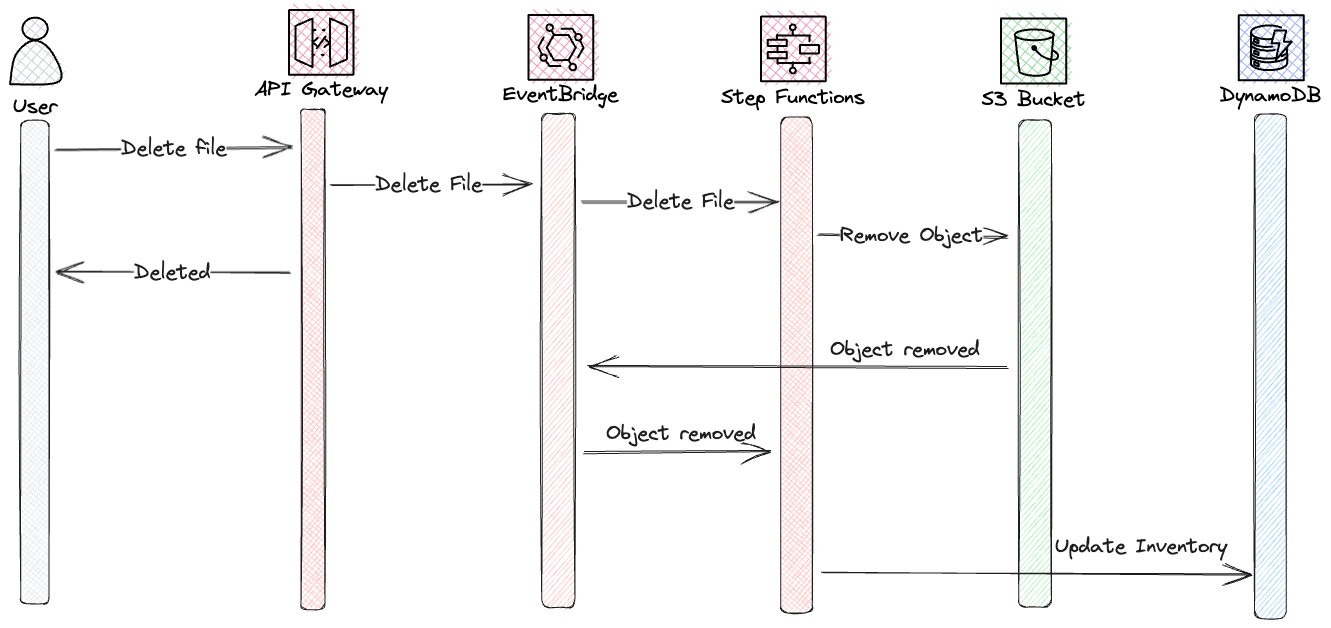

Now let's have a look at the flow for deleting a file.

In this case I use an asynchronous workflow. I integrate API Gateway directly with EventBridge and add an event for file deletion that can be processed in the background. There is no need for the user to know exactly when the process is completed. API Gateway returns status 200 OK once the event is on the event bus. This is a good example of the storage-first pattern, where we integrate AWS managed services directly and add the event to a persistent service, in this case EventBridge.
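The background part of the deletion flow could look roughly like this consumer. It is a sketch that assumes the event detail uses the metadata/data envelope described under Best practices, with `Action` and `FilePath` in `data`. The `delete_object` and `delete_index_entry` callables are hypothetical stand-ins for the S3 and DynamoDB calls. EventBridge delivers events at least once, so both operations should be safe to repeat. S3 `DeleteObject` and DynamoDB `DeleteItem` both succeed when the target is already gone.

```python
from typing import Any, Callable, Dict


def handle_file_deleted(
    event: Dict[str, Any],
    delete_object: Callable[[str], Any],
    delete_index_entry: Callable[[str], Any],
) -> str:
    """Background consumer for the file-deletion event placed on the bus by API Gateway."""
    data = event["detail"]["data"]
    if data.get("Action") != "FileDeleted":
        raise ValueError(f"unexpected action: {data.get('Action')}")
    path = data["FilePath"]
    # Both operations must be idempotent: the same event may arrive more than once.
    delete_object(path)
    delete_index_entry(path)
    return path


# In-memory fakes for the bucket and the index; pop(..., None) makes deletes idempotent.
files = {"docs/report.pdf": b"%PDF"}
index = {"docs/report.pdf": {"Size": 4}}
event = {"detail": {"metadata": {"EventVersion": 1},
                    "data": {"Action": "FileDeleted", "FilePath": "docs/report.pdf"}}}
for _ in range(2):  # simulate a duplicate delivery
    handle_file_deleted(event, lambda p: files.pop(p, None), lambda p: index.pop(p, None))
print(files, index)  # {} {}
```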

I would then repeat the above design process for each action. There is no need to go into every action in this post; I think you all get the process.

Best practices

With the design completed, let's go into some of the best practices I have learned over the years.

Use the storage-first pattern

Always aim to use the storage-first pattern. This ensures that data and messages are not lost. You can read more about storage-first in my post Serverless patterns.
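The idea behind storage-first can be sketched without any AWS dependencies. `DurableStore` is a hypothetical stand-in for a managed, durable service such as SQS or EventBridge. `api_handler` and `process_pending` are illustrative names. The point is the ordering. The request is persisted before any business logic runs, and a message is only removed after it has been processed successfully.

```python
from typing import Any, Callable, Dict, List


class DurableStore:
    """Hypothetical stand-in for a managed, durable service such as SQS or EventBridge."""

    def __init__(self) -> None:
        self.messages: List[Dict[str, Any]] = []

    def put(self, message: Dict[str, Any]) -> None:
        self.messages.append(message)


def api_handler(body: Dict[str, Any], store: DurableStore) -> Dict[str, int]:
    # Storage first: persist the request before any business logic runs,
    # so a later processing failure cannot lose it.
    store.put(body)
    return {"statusCode": 200}


def process_pending(store: DurableStore, process: Callable[[Dict[str, Any]], None]) -> int:
    # Remove a message only after successful processing; failures stay for a retry.
    remaining = []
    for message in store.messages:
        try:
            process(message)
        except Exception:
            remaining.append(message)
    processed = len(store.messages) - len(remaining)
    store.messages = remaining
    return processed


store = DurableStore()
api_handler({"FilePath": "docs/a.pdf"}, store)
api_handler({"FilePath": "docs/b.pdf"}, store)


def flaky(message: Dict[str, Any]) -> None:
    if message["FilePath"].endswith("b.pdf"):
        raise RuntimeError("temporary failure")


print(process_pending(store, flaky), store.messages)
# 1 [{'FilePath': 'docs/b.pdf'}]
```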

Use JSON

Always create your commands and events in JSON. Why? JSON is widely supported by AWS services; SQS, SNS, and EventBridge can apply powerful logic based on JSON-formatted data. Don't use YAML, Protobuf, XML, or any other format.
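As an example of that logic, EventBridge rules select events with JSON event patterns, where each leaf lists the allowed values. The function below is a deliberately simplified sketch of that matching. It handles only exact values and nested objects. It does not support prefix, numeric, `anything-but`, `exists`, or array-valued event fields, all of which real EventBridge patterns do. The `file-manager` source name is hypothetical.

```python
from typing import Any, Dict


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Simplified EventBridge-style matching: exact values listed in arrays, nested objects."""
    for field, expected in pattern.items():
        if field not in event:
            return False
        actual = event[field]
        if isinstance(expected, dict):
            # Nested pattern: descend into the corresponding JSON object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # Leaf: the event value must equal one of the listed values.
            return False
    return True


pattern = {"source": ["file-manager"], "detail": {"data": {"Action": ["FileDeleted"]}}}
event = {"source": "file-manager",
         "detail": {"data": {"Action": "FileDeleted", "FilePath": "docs/a.pdf"}}}
print(matches(pattern, event))  # True
```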

Event Envelope

Always use an event envelope, which is a wrapper around your original event. The pattern I commonly use is the metadata-data pattern. In the data key we keep the event information, the original event. In the metadata key we add information about the event itself, like a trace ID, the event version, and more.

```json
{
  "metadata": {
    "EventVersion": 2,
    "TraceId": "uuid",
    "...": "..."
  },
  "data": {
    "Action": "FileDeleted",
    "FilePath": "....",
    "...": "..."
  }
}
```
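A producer-side helper for this envelope can stay very small. The sketch below assumes the field names from the example above (`EventVersion`, `TraceId`). It reuses an incoming trace ID when one is available and otherwise generates a UUID. The `wrap` function name is hypothetical.

```python
import json
import uuid
from typing import Any, Dict, Optional


def wrap(data: Dict[str, Any], event_version: int, trace_id: Optional[str] = None) -> Dict[str, Any]:
    """Wrap an event in a metadata/data envelope (hypothetical helper)."""
    return {
        "metadata": {
            "EventVersion": event_version,
            # Reuse the caller's trace id so a request can be followed across services.
            "TraceId": trace_id or str(uuid.uuid4()),
        },
        "data": data,
    }


envelope = wrap({"Action": "FileDeleted", "FilePath": "docs/report.pdf"},
                event_version=2, trace_id="trace-123")
print(json.dumps(envelope))
# {"metadata": {"EventVersion": 2, "TraceId": "trace-123"}, "data": {"Action": "FileDeleted", "FilePath": "docs/report.pdf"}}
```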

Backward compatibility

I have already touched on this in previous sections. Never introduce breaking changes to your events: don't change data formats and don't remove fields. Changes like these are guaranteed to break consumers. So always keep backward compatibility.
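These two rules are simple enough to check automatically, for example in a CI step before a new event schema is published. The sketch below uses a hypothetical, flat schema format that maps each field name to a type name. Real schema registries and JSON Schema are richer than this. The check flags removed fields and changed types and treats added fields as compatible.

```python
from typing import Dict, List

Schema = Dict[str, str]  # hypothetical flat schema: field name -> type name


def breaking_changes(old: Schema, new: Schema) -> List[str]:
    """List changes in `new` that would break consumers of `old`."""
    problems = []
    for field, old_type in old.items():
        if field not in new:
            problems.append(f"removed field '{field}'")
        elif new[field] != old_type:
            problems.append(f"changed type of '{field}' from {old_type} to {new[field]}")
    # Fields that exist only in `new` are additions, which are backward compatible.
    return problems


v1 = {"Action": "string", "FilePath": "string"}
print(breaking_changes(v1, {**v1, "Size": "number"}))  # []
print(breaking_changes(v1, {"Action": "string", "FilePath": "array"}))
# ["changed type of 'FilePath' from string to array"]
```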

Event versioning

If you need to introduce breaking changes, make sure that you are using event versions. That doesn't mean, however, that your producer can just upgrade the version and send only the latest one. As long as there are breaking changes between versions, you must keep sending every version that is still in use; otherwise consumers of the older versions will break.
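Here is a sketch of a producer that emits one envelope per version still in use. Both payload shapes are hypothetical. Version 1 carries a combined `FilePath`, and version 2 is a breaking change that splits it into `Bucket` and `Key`. A consumer could then subscribe only to the version it understands, for example with an EventBridge pattern on `metadata.EventVersion`. Requesting a version without a builder raises a `KeyError`.

```python
from typing import Any, Dict, Iterable, List


def build_file_deleted_events(bucket: str, key: str,
                              versions_in_use: Iterable[int]) -> List[Dict[str, Any]]:
    """Produce one envelope per event version that consumers still rely on."""
    builders = {
        # Hypothetical v1: a single combined path.
        1: lambda: {"Action": "FileDeleted", "FilePath": f"{bucket}/{key}"},
        # Hypothetical v2 (breaking change): path split into bucket and key.
        2: lambda: {"Action": "FileDeleted", "Bucket": bucket, "Key": key},
    }
    return [
        {"metadata": {"EventVersion": version}, "data": builders[version]()}
        for version in sorted(versions_in_use)
    ]


for event in build_file_deleted_events("file-manager-files", "docs/report.pdf", {1, 2}):
    print(event["metadata"]["EventVersion"], event["data"])
# 1 {'Action': 'FileDeleted', 'FilePath': 'file-manager-files/docs/report.pdf'}
# 2 {'Action': 'FileDeleted', 'Bucket': 'file-manager-files', 'Key': 'docs/report.pdf'}
```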

Default EventBridge Bus

Even though there is a default event bus in every Region of every AWS account, I strongly advise against using it for your custom events. AWS services, like S3, EC2, etc., post their events on the default bus. Let AWS have that bus to itself and create custom event buses for your own applications. This gives you all the control you need. So leave the default bus alone.
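PutEvents falls back to the default bus when `EventBusName` is omitted. For that reason it can help to build entries through one small function that always names the custom bus. The sketch below produces entries with the fields the EventBridge PutEvents API accepts (`EventBusName`, `Source`, `DetailType`, `Detail`). The bus name `file-manager-bus` and the source `file-manager` are hypothetical. A bus like this could be created with `aws events create-event-bus --name file-manager-bus`.

```python
import json
from typing import Any, Dict


def put_events_entry(bus_name: str, source: str, detail_type: str,
                     detail: Dict[str, Any]) -> Dict[str, str]:
    """Build one EventBridge PutEvents entry that targets a custom event bus."""
    if bus_name == "default":
        # Guard against accidentally publishing application events to the default bus.
        raise ValueError("use a custom event bus for application events")
    return {
        "EventBusName": bus_name,
        "Source": source,
        "DetailType": detail_type,
        "Detail": json.dumps(detail),  # PutEvents expects Detail as a JSON string
    }


entry = put_events_entry(
    "file-manager-bus", "file-manager", "FileDeleted",
    {"metadata": {"EventVersion": 1},
     "data": {"Action": "FileDeleted", "FilePath": "docs/report.pdf"}},
)
# With boto3, the entry could then be sent with:
# boto3.client("events").put_events(Entries=[entry])
```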

Final Words

I hope you had as much fun reading this post as I had writing it, and that you learned something new and got some insights into how I design event-driven systems and the way I think.

If event-driven and serverless architectures interest you, there are a couple more people to follow on the topic:

David Boyne, Developer Advocate at AWS

Luc van Donkersgoed, AWS Serverless Hero

Sheen Brisals, AWS Serverless Hero

Allen Helton, AWS Serverless Hero

Don't forget to follow me on LinkedIn and Twitter for more content, and read the rest of my blog posts.