Run a Java service serverless with ECS and Fargate

I normally don't write much about container workloads; most of my posts are about some form of event-driven architecture with AWS Lambda, Step Functions, and EventBridge. However, while working on a different post I realized that I needed to write an introduction to running container workloads on ECS with Fargate. But is Fargate really serverless? I would say that, for a container service, it is: there are no servers or instances for us to provision or manage.

Architecture Overview

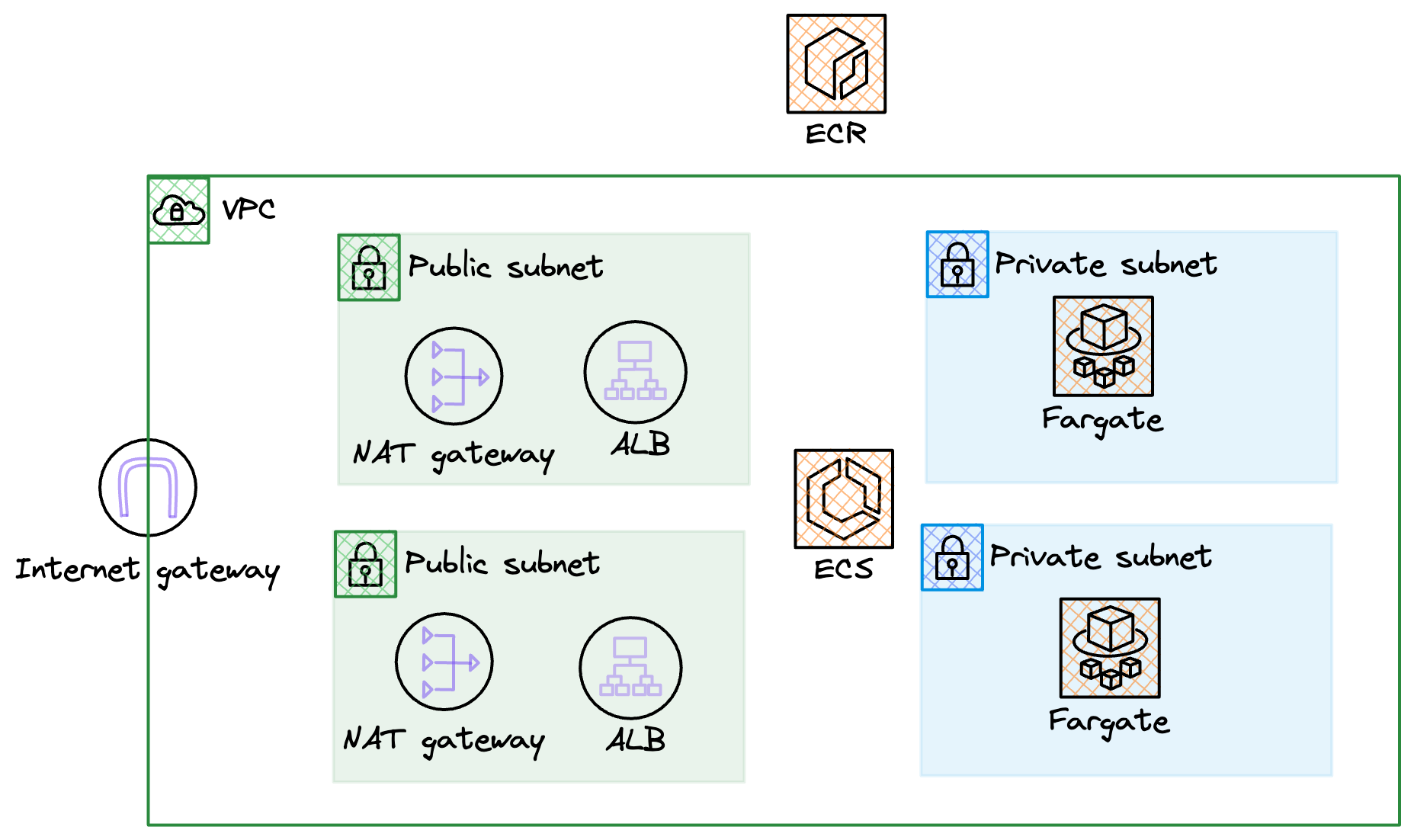

First, let's go over the architecture that we need to create. A container workload on ECS needs a VPC to run in. We'll create a VPC with two public and two private subnets, a NAT Gateway in each public subnet (with a default route from the corresponding private subnet), an Internet Gateway, and of course all the needed Security Groups. One Application Load Balancer (ALB) spans both public subnets and sends traffic to the Fargate-based containers running in the ECS cluster in the private subnets. ECS pulls container images from ECR when starting new containers. It's a fairly basic architecture, so let's go build!

Deploy VPC

To deploy the VPC let's turn to CloudFormation and SAM CLI. I'll be using this template, which creates the basic VPC described in the overview section.

AWSTemplateFormatVersion: "2010-09-09"

Description: Setup basic VPC

Parameters:

Application:

Type: String

IPSuperSet:

Type: String

Description: The IP Superset to use for the VPC CIDR range, e.g 10.0

Default: "10.0"

Resources:

VPC:

Type: AWS::EC2::VPC

Properties:

EnableDnsSupport: true

EnableDnsHostnames: true

CidrBlock: !Sub "${IPSuperSet}.0.0/16"

Tags:

- Key: Name

Value: !Ref Application

PublicSubnetOne:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone:

Fn::Select:

- 0

- Fn::GetAZs: { Ref: "AWS::Region" }

VpcId: !Ref VPC

CidrBlock: !Sub ${IPSuperSet}.0.0/24

MapPublicIpOnLaunch: true

Tags:

- Key: Name

Value: !Sub ${Application}-public-one

PublicSubnetTwo:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone:

Fn::Select:

- 1

- Fn::GetAZs: { Ref: "AWS::Region" }

VpcId: !Ref VPC

CidrBlock: !Sub ${IPSuperSet}.1.0/24

MapPublicIpOnLaunch: true

Tags:

- Key: Name

Value: !Sub ${Application}-public-two

PrivateSubnetOne:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone:

Fn::Select:

- 0

- Fn::GetAZs: { Ref: "AWS::Region" }

VpcId: !Ref VPC

CidrBlock: !Sub ${IPSuperSet}.2.0/24

MapPublicIpOnLaunch: false

Tags:

- Key: Name

Value: !Sub ${Application}-private-one

PrivateSubnetTwo:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone:

Fn::Select:

- 1

- Fn::GetAZs: { Ref: "AWS::Region" }

VpcId: !Ref VPC

CidrBlock: !Sub ${IPSuperSet}.3.0/24

MapPublicIpOnLaunch: false

Tags:

- Key: Name

Value: !Sub ${Application}-private-two

InternetGateway:

Type: AWS::EC2::InternetGateway

Properties:

Tags:

- Key: Name

Value: !Ref Application

GatewayAttachement:

Type: AWS::EC2::VPCGatewayAttachment

Properties:

VpcId: !Ref VPC

InternetGatewayId: !Ref InternetGateway

NatGatewayIpOne:

Type: AWS::EC2::EIP

Properties:

Domain: vpc

Tags:

- Key: Name

Value: !Sub ${Application}-natgateway-one

NatGatewayOne:

Type: AWS::EC2::NatGateway

Properties:

AllocationId: !GetAtt NatGatewayIpOne.AllocationId

SubnetId: !Ref PublicSubnetOne

Tags:

- Key: Name

Value: !Sub ${Application}-natgateway-one

NatGatewayIpTwo:

Type: AWS::EC2::EIP

Properties:

Domain: vpc

Tags:

- Key: Name

Value: !Sub ${Application}-natgateway-two

NatGatewayTwo:

Type: AWS::EC2::NatGateway

Properties:

AllocationId: !GetAtt NatGatewayIpTwo.AllocationId

SubnetId: !Ref PublicSubnetTwo

Tags:

- Key: Name

Value: !Sub ${Application}-natgateway-two

PublicRouteTable:

Type: AWS::EC2::RouteTable

Properties:

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub ${Application}-public-rt

PublicRoute:

Type: AWS::EC2::Route

DependsOn: GatewayAttachement

Properties:

RouteTableId: !Ref PublicRouteTable

DestinationCidrBlock: 0.0.0.0/0

GatewayId: !Ref InternetGateway

PublicSubnetOneRouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref PublicSubnetOne

RouteTableId: !Ref PublicRouteTable

PublicSubnetTwoRouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref PublicSubnetTwo

RouteTableId: !Ref PublicRouteTable

PrivateRouteTableOne:

Type: AWS::EC2::RouteTable

Properties:

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub ${Application}-private-rt-one

PrivateRouteOne:

Type: AWS::EC2::Route

DependsOn: NatGatewayOne

Properties:

RouteTableId: !Ref PrivateRouteTableOne

DestinationCidrBlock: 0.0.0.0/0

NatGatewayId: !Ref NatGatewayOne

PrivateSubnetOneRouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref PrivateSubnetOne

RouteTableId: !Ref PrivateRouteTableOne

PrivateRouteTableTwo:

Type: AWS::EC2::RouteTable

Properties:

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub ${Application}-private-rt-two

PrivateRouteTwo:

Type: AWS::EC2::Route

DependsOn: NatGatewayTwo

Properties:

RouteTableId: !Ref PrivateRouteTableTwo

DestinationCidrBlock: 0.0.0.0/0

NatGatewayId: !Ref NatGatewayTwo

PrivateSubnetTwoRouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref PrivateSubnetTwo

RouteTableId: !Ref PrivateRouteTableTwo

Outputs:

VpcId:

Description: The ID of the VPC

Value: !Ref VPC

Export:

Name: !Sub ${AWS::StackName}:VpcId

VpcCidr:

Description: The Cidr of the VPC

Value: !Sub ${IPSuperSet}.0.0/16

Export:

Name: !Sub ${AWS::StackName}:Cidr

PublicSubnetOne:

Description: The Public Subnet One

Value: !Ref PublicSubnetOne

Export:

Name: !Sub ${AWS::StackName}:PublicSubnetOne

PublicSubnetTwo:

Description: The Public Subnet Two

Value: !Ref PublicSubnetTwo

Export:

Name: !Sub ${AWS::StackName}:PublicSubnetTwo

PrivateSubnetOne:

Description: The Private Subnet One

Value: !Ref PrivateSubnetOne

Export:

Name: !Sub ${AWS::StackName}:PrivateSubnetOne

PrivateSubnetTwo:

Description: The Private Subnet Two

Value: !Ref PrivateSubnetTwo

Export:

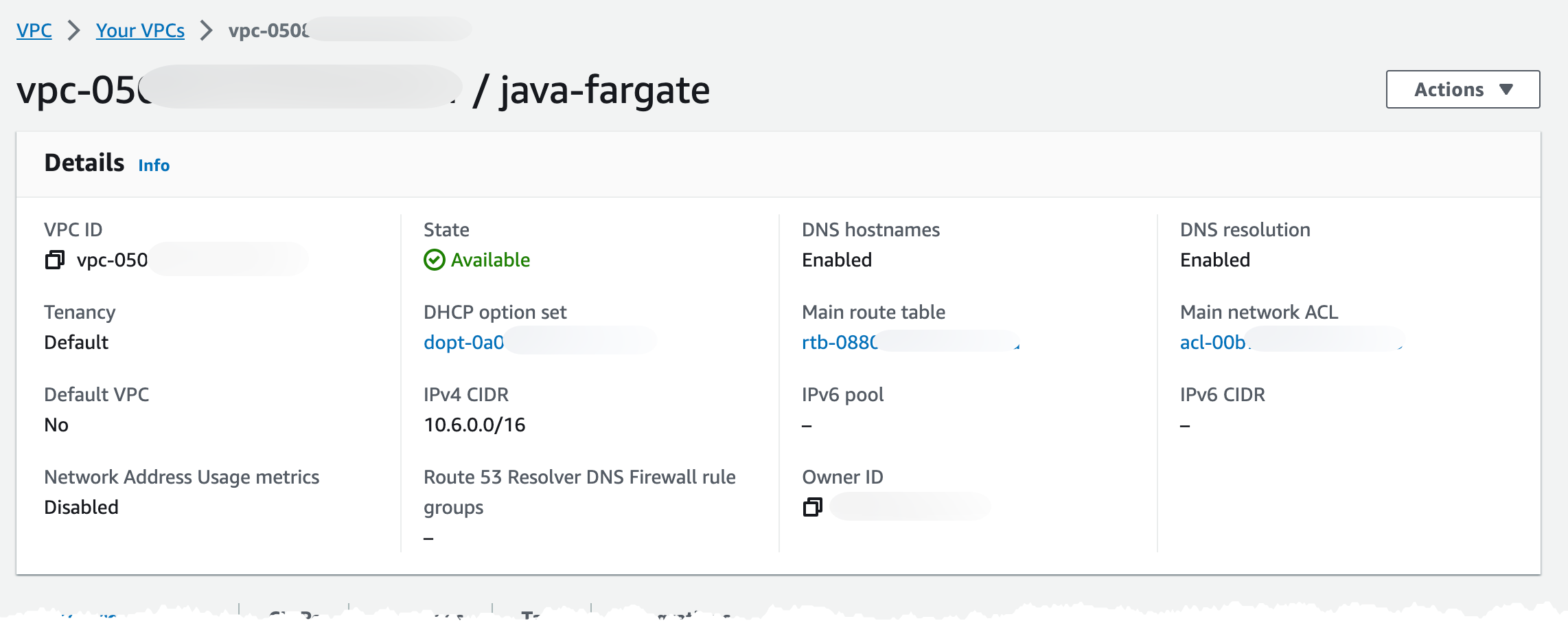

Name: !Sub ${AWS::StackName}:PrivateSubnetTwoAfter deployment we should end up with a VPC looking like this. I decided to use a 10.6.0.0 cidr.
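The deploy command itself isn't shown here, so the following is only a minimal sketch of how the VPC stack could be deployed with SAM CLI. The file name `vpc.yaml`, the function name `deploy_vpc`, and the stack and application names are assumptions made for illustration; only the parameter names `Application` and `IPSuperSet` come from the template.

```shell
# Deploy the VPC template with SAM CLI.
# Assumptions: the template is saved as vpc.yaml in the current directory;
# the stack name and application name are placeholders chosen by the caller.
deploy_vpc() {
  local stack_name="$1"
  local application="$2"
  local ip_superset="${3:-10.0}"   # same default as the template parameter

  sam deploy \
    --template-file vpc.yaml \
    --stack-name "$stack_name" \
    --resolve-s3 \
    --parameter-overrides "Application=$application" "IPSuperSet=$ip_superset"
}
```

Calling `deploy_vpc demo-vpc demo 10.6` would then request a VPC with the 10.6.0.0/16 range. The stack name matters later, since the other templates import the VPC outputs through it.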

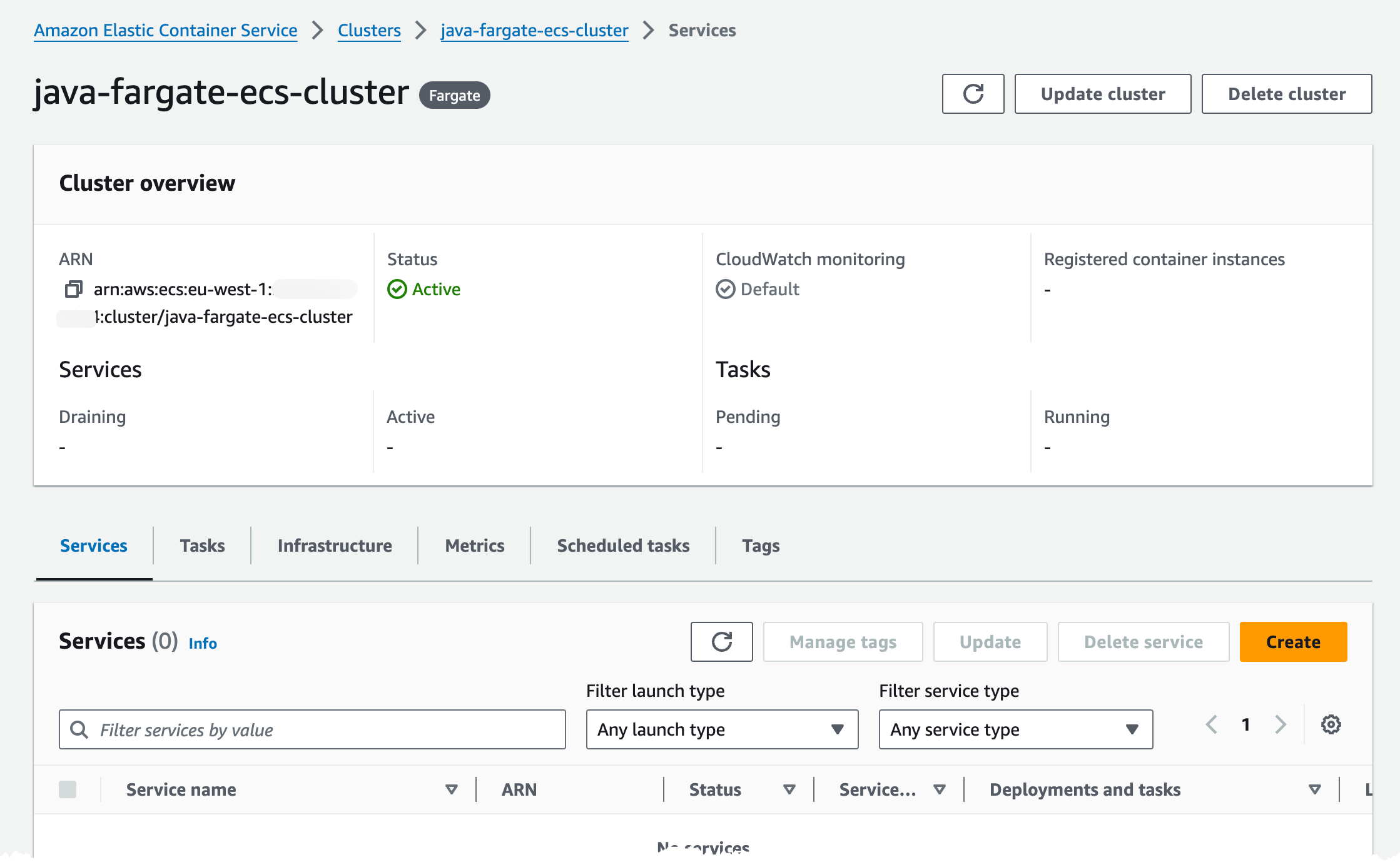

If we scroll down a bit we can see the resource map, which shows the current routing setup (the grey lines). It shows that the public subnets are routed to the Internet Gateway and that each private subnet is routed to its corresponding NAT Gateway. We can also see that the default route table (highlighted with the red arrow), which is created automatically, is not used.

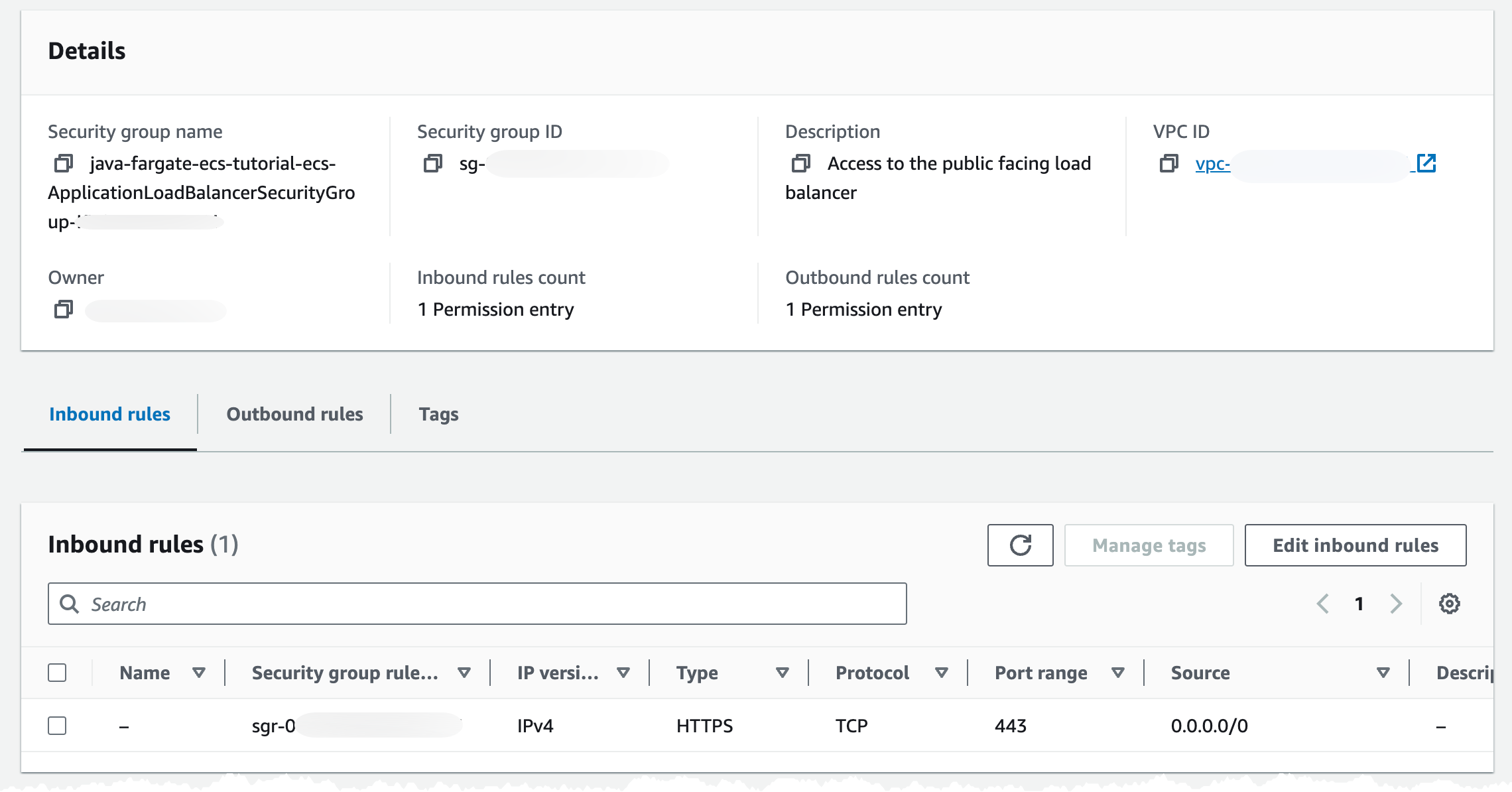

Deploy ECS and ALB

With the VPC in place we can deploy the ECS cluster and the ALB. We'll also create the security groups needed for communication. On the ALB we'll allow traffic on port 443, and for the ECS cluster we'll create a security group that allows traffic on port 8080 (the port used by our service later) coming from the ALB only. On the ALB we'll create a listener whose default action only returns a static 503 response. When we later deploy our service, it will create a new listener rule for routing. This way we can decouple the ALB from the services and just have our services hook into the ALB. We'll also create an IAM task execution role that allows ECS to pull images from ECR; the task definition of our service will reference it later.

In this scenario we need a hosted zone in Route53 so that we can use secure HTTPS connections to the ALB: we need to create a DNS record and a certificate in Certificate Manager. If you don't have a Route53 hosted zone to create DNS records in, you need to change the ALB security group and listener to use port 80 (HTTP) instead of port 443, and leave out the certificate and the DNS record.

We'll deploy this template to create all the resources needed.

    AWSTemplateFormatVersion: 2010-09-09
    Description: Setup Infrastructure for services and tasks to run in

    Parameters:
      Application:
        Type: String
      VPCStackName:
        Type: String
        Description: Name of the Stack that created the VPC
      DomainName:
        Type: String
        Description: Domain name to use for the ALB
      HostedZoneId:
        Type: String
        Description: Hosted Zone ID for the Route53 hosted zone for the DomainName

    Resources:
      ECSCluster:
        Type: AWS::ECS::Cluster
        Properties:
          ClusterName: !Sub ${Application}-ecs-cluster
          ClusterSettings:
            - Name: containerInsights
              Value: disabled
          CapacityProviders:
            - FARGATE
            - FARGATE_SPOT
          DefaultCapacityProviderStrategy:
            - CapacityProvider: FARGATE
              Weight: 1
          # ServiceConnectDefaults expects an object with a Namespace, not a
          # plain string; it is left out since this post does not use Service Connect.
          Tags:
            - Key: Name
              Value: !Sub ${Application}
      ECSClusterSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupName: !Sub ${Application}-cluster-security-group
          GroupDescription: Security group for cluster
          VpcId:
            Fn::ImportValue: !Sub ${VPCStackName}:VpcId
          SecurityGroupIngress:
            - IpProtocol: tcp
              FromPort: 8080
              ToPort: 8080
              SourceSecurityGroupId: !GetAtt ApplicationLoadBalancerSecurityGroup.GroupId
          SecurityGroupEgress:
            - IpProtocol: tcp
              FromPort: 0
              ToPort: 65535
              CidrIp: 0.0.0.0/0
          Tags:
            - Key: Name
              Value: !Sub ${Application}-cluster-security-group
      ECSClusterTaskExecutionRole:
        Type: AWS::IAM::Role
        Properties:
          RoleName: !Sub ${Application}-cluster-role
          AssumeRolePolicyDocument:
            Statement:
              - Effect: Allow
                Principal:
                  Service:
                    - ecs-tasks.amazonaws.com
                Action:
                  - sts:AssumeRole
          Policies:
            - PolicyName: !Sub ${Application}-cluster-role-policy
              PolicyDocument:
                Statement:
                  - Effect: Allow
                    Action:
                      - ecr:GetAuthorizationToken
                      - ecr:BatchCheckLayerAvailability
                      - ecr:GetDownloadUrlForLayer
                      - ecr:BatchGetImage
                      - logs:CreateLogStream
                      - logs:PutLogEvents
                    Resource: "*"
          Tags:
            - Key: Name
              Value: !Sub ${Application}-cluster-role
      ApplicationLoadBalancerSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupDescription: Access to the public facing load balancer
          VpcId:
            Fn::ImportValue: !Sub ${VPCStackName}:VpcId
          SecurityGroupIngress:
            - IpProtocol: tcp
              FromPort: 443
              ToPort: 443
              CidrIp: 0.0.0.0/0
          Tags:
            - Key: Name
              Value: !Sub ${Application}-alb-sg
      ApplicationLoadBalancerCertificate:
        Type: AWS::CertificateManager::Certificate
        Properties:
          CertificateTransparencyLoggingPreference: ENABLED
          DomainName: !Ref DomainName
          ValidationMethod: DNS
          DomainValidationOptions:
            - DomainName: !Ref DomainName
              HostedZoneId: !Ref HostedZoneId
      ApplicationLoadBalancer:
        Type: AWS::ElasticLoadBalancingV2::LoadBalancer
        Properties:
          Scheme: internet-facing
          Name: !Sub ${Application}-alb
          Type: application
          Subnets:
            - Fn::ImportValue: !Sub ${VPCStackName}:PublicSubnetOne
            - Fn::ImportValue: !Sub ${VPCStackName}:PublicSubnetTwo
          SecurityGroups:
            - !Ref ApplicationLoadBalancerSecurityGroup
          Tags:
            - Key: Name
              Value: !Sub ${Application}-alb
      ApplicationLoadBalancerListener:
        Type: AWS::ElasticLoadBalancingV2::Listener
        Properties:
          DefaultActions:
            - FixedResponseConfig:
                StatusCode: 503
              Type: fixed-response
          LoadBalancerArn: !Ref ApplicationLoadBalancer
          Port: 443
          Protocol: HTTPS
          Certificates:
            - CertificateArn: !Ref ApplicationLoadBalancerCertificate
      ApplicationLoadBalancerServiceDNS:
        Type: AWS::Route53::RecordSetGroup
        Properties:
          HostedZoneId: !Ref HostedZoneId
          Comment: Zone alias targeted to ApplicationLoadBalancer
          RecordSets:
            - Name: !Ref DomainName
              Type: A
              AliasTarget:
                DNSName: !GetAtt ApplicationLoadBalancer.DNSName
                HostedZoneId: !GetAtt ApplicationLoadBalancer.CanonicalHostedZoneID

    Outputs:
      ECSCluster:
        Description: The ECS Cluster
        Value: !Ref ECSCluster
        Export:
          Name: !Sub ${AWS::StackName}:ecs-cluster
      ECSClusterTaskExecutionRoleArn:
        Value: !GetAtt ECSClusterTaskExecutionRole.Arn
        Export:
          Name: !Sub ${AWS::StackName}:cluster-role
      ECSClusterSecurityGroupArn:
        Value: !Ref ECSClusterSecurityGroup
        Export:
          Name: !Sub ${AWS::StackName}:cluster-sg
      ApplicationLoadBalancerSecurityGroupArn:
        Description: The Load balancer Security Group
        Value: !Ref ApplicationLoadBalancerSecurityGroup
        Export:
          Name: !Sub ${AWS::StackName}:alb-sg
      ApplicationLoadBalancerArn:
        Description: The Load balancer ARN
        Value: !Ref ApplicationLoadBalancer
        Export:
          Name: !Sub ${AWS::StackName}:alb-arn
      ApplicationLoadBalancerListener:
        Description: The Load balancer listener
        Value: !Ref ApplicationLoadBalancerListener
        Export:
          Name: !Sub ${AWS::StackName}:alb-listener

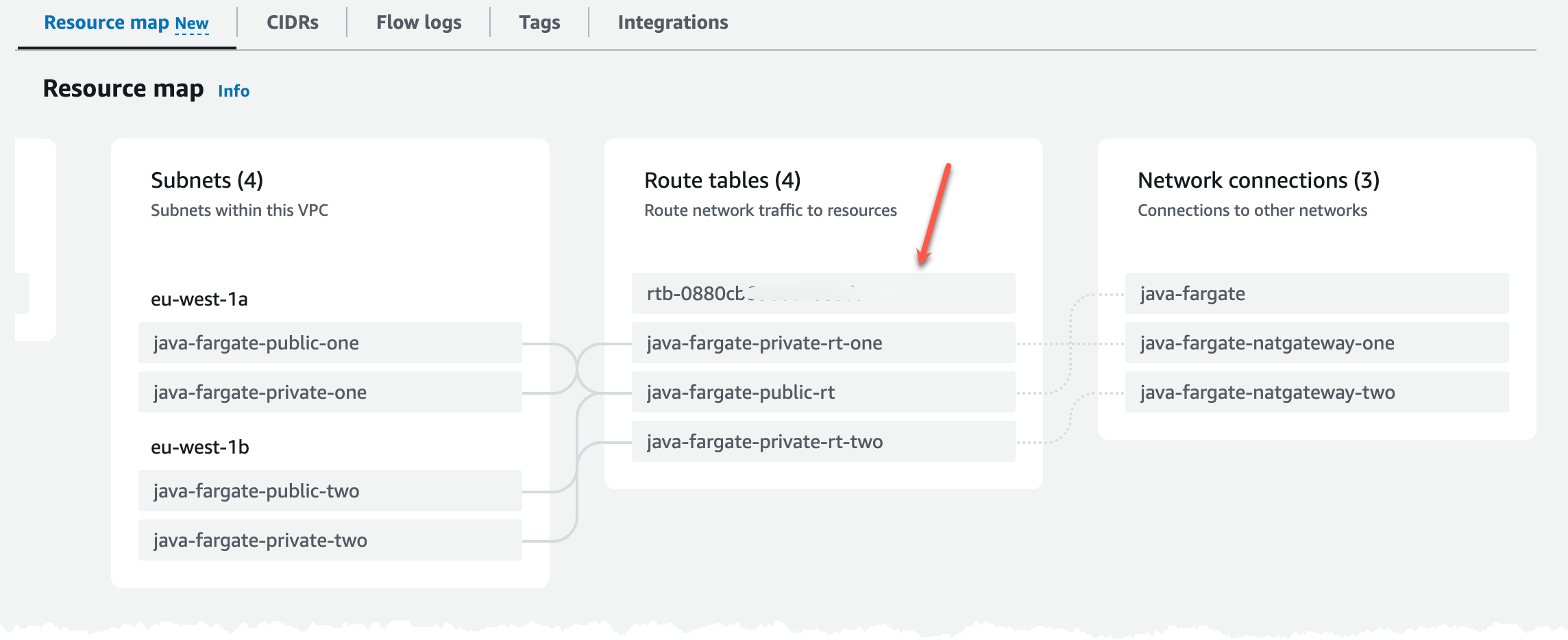

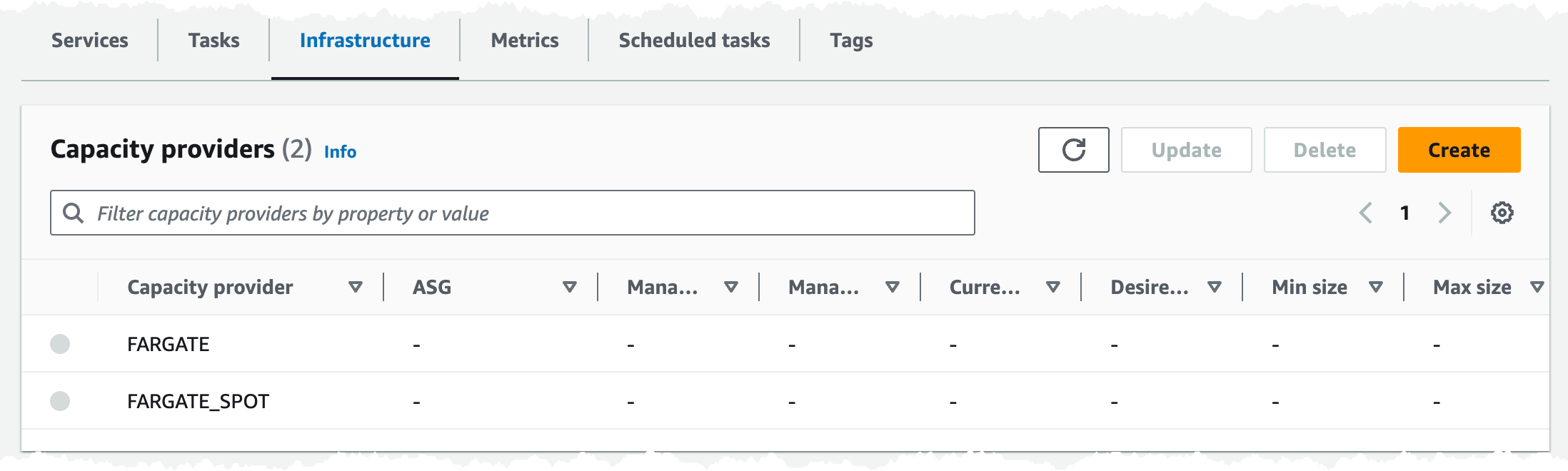

Looking in the console we should now have an ECS cluster looking something like this. We can see that we don't have any services or tasks running at this point, since none have been deployed yet.

We can also see that both the Fargate and Fargate Spot capacity providers are available in the cluster.

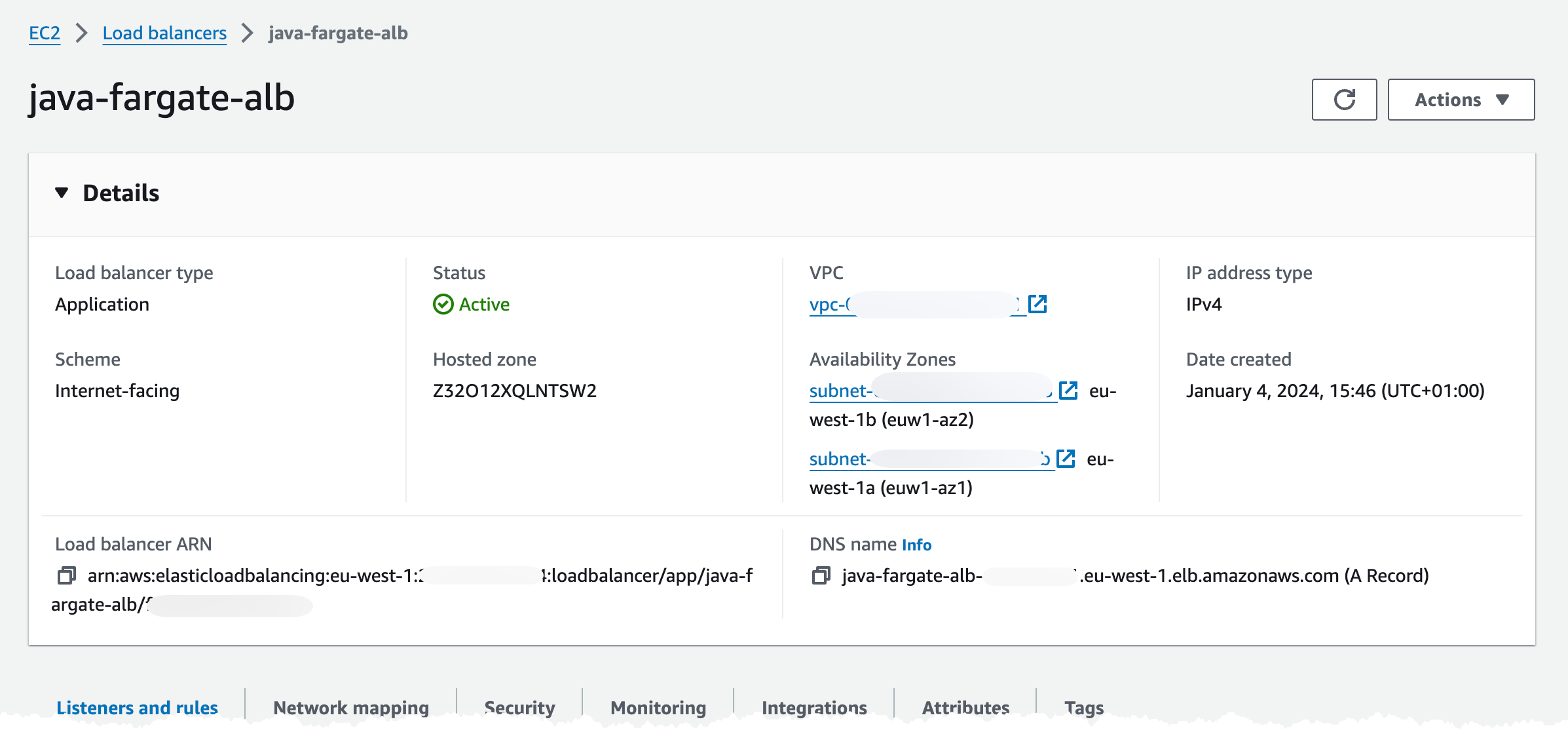

Turning to the ALB it has been deployed to two subnets in our VPC.

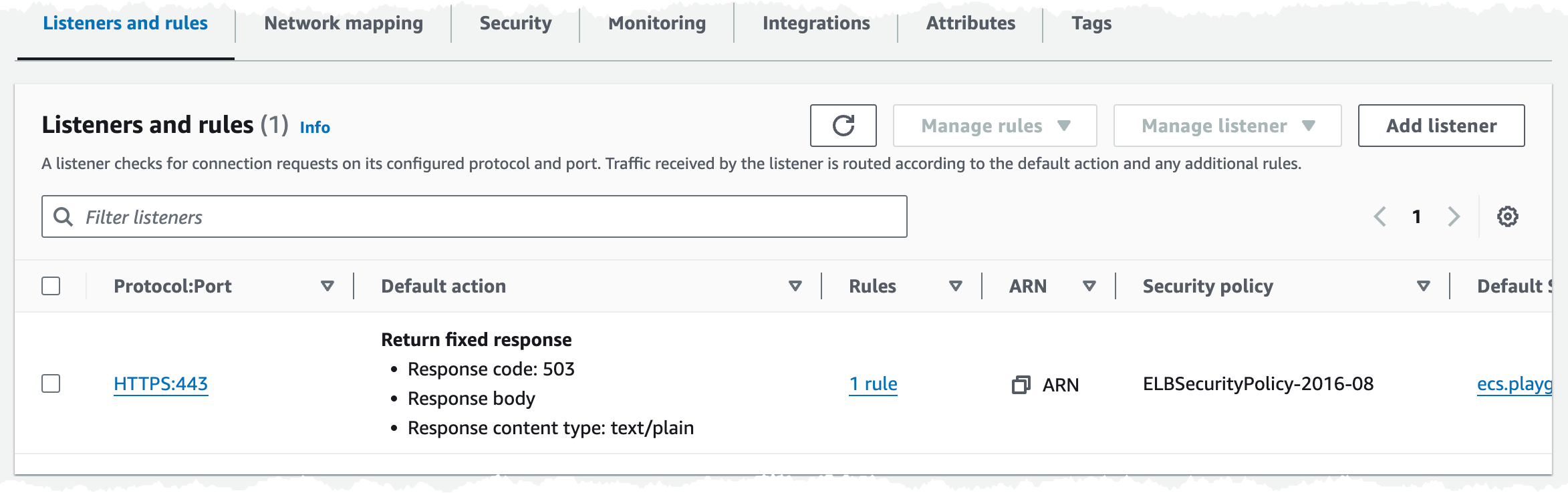

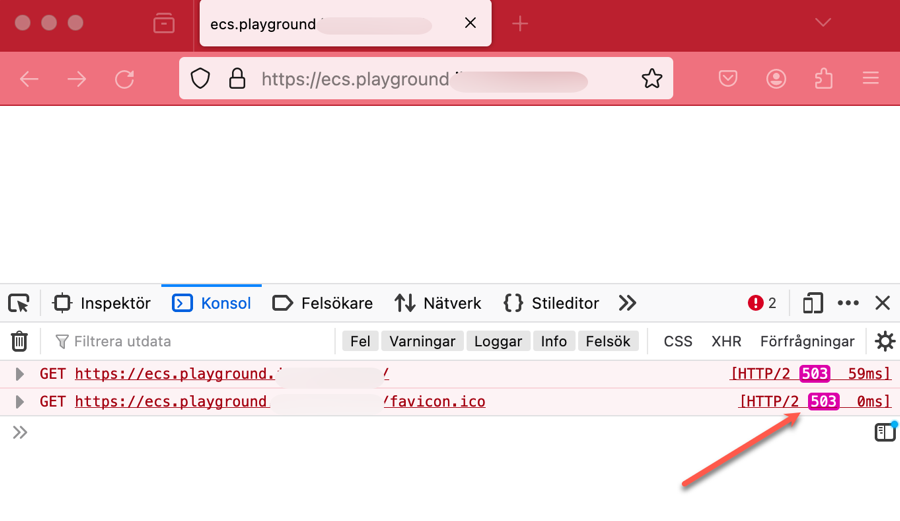

In the Listeners and rules tab, we can check that we have one listener on port 443 whose default rule returns a fixed 503 response.

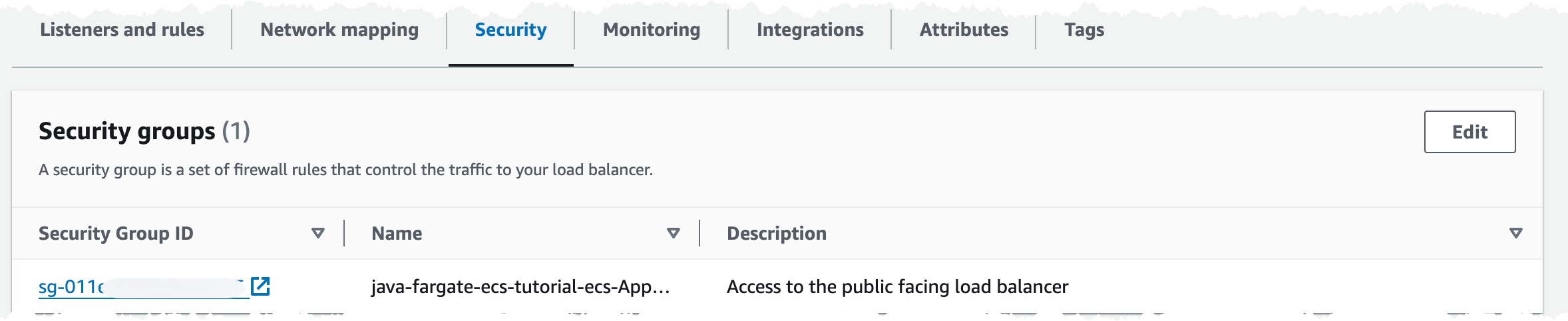

In the Security tab we have one Security Group associated, and if we check that group it should allow traffic on port 443 from the internet.

We can check that we get a 503 response back by navigating to the DNS record we created.
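The same check can be done from a terminal instead of the browser. This is a small sketch using curl; the helper name `http_status` is made up for this example, and the URL is whatever domain name you passed as `DomainName`.

```shell
# Print only the HTTP status code returned for a URL.
http_status() {
  curl --silent --output /dev/null --write-out '%{http_code}' "$1"
}
```

Before any service has registered its own listener rule, `http_status "https://your.domain.example/"` should print 503, which is the fixed response of the default action.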

Now the basic infrastructure is created and we can deploy our decoupled application service.

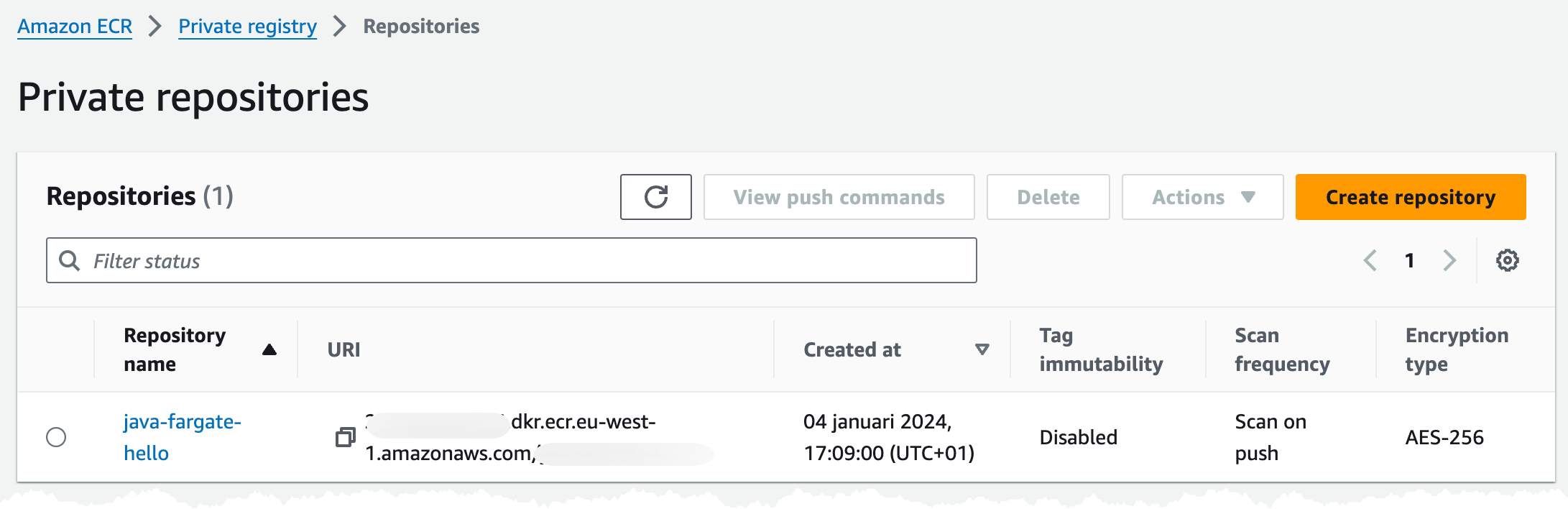

Deploy ECR

The first thing our application service needs is an ECR repository where we can push our images. To create that we deploy this short template. We enable scan on push, so that ECR scans each new image for security vulnerabilities when we push it.

AWSTemplateFormatVersion: 2010-09-09

Description: Setup and configure the Service ECR repository

Parameters:

Application:

Type: String

Description: Name of the application owning all resources

Service:

Type: String

Description: Name of the service

Resources:

EcrRepository:

Type: AWS::ECR::Repository

Properties:

RepositoryName: !Sub ${Application}-${Service}

ImageScanningConfiguration:

ScanOnPush: True

DeletionPolicy: Retain

UpdateReplacePolicy: Retain

Outputs:

ECRRepositoryName:

Value: !Ref EcrRepository

Export:

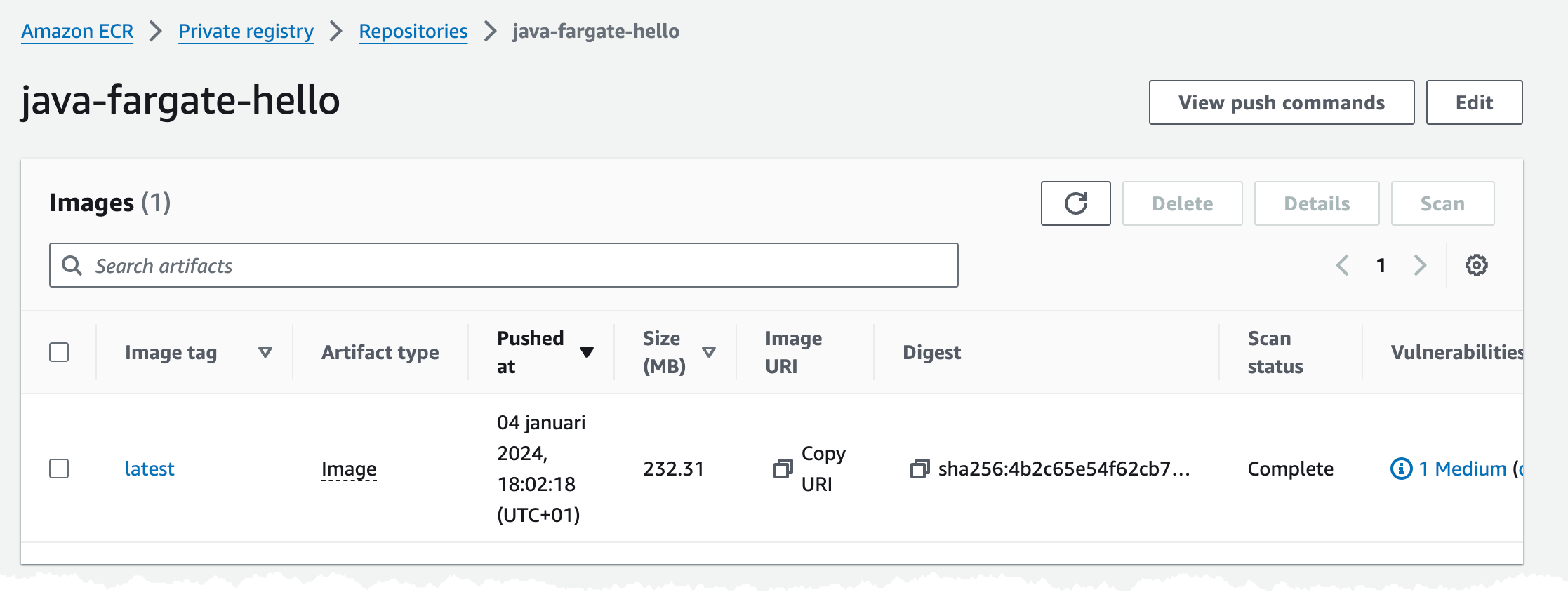

Name: !Sub ${AWS::StackName}:ecr-repositoryIn the console we should see an empty repository looking like this.

Build and push application image

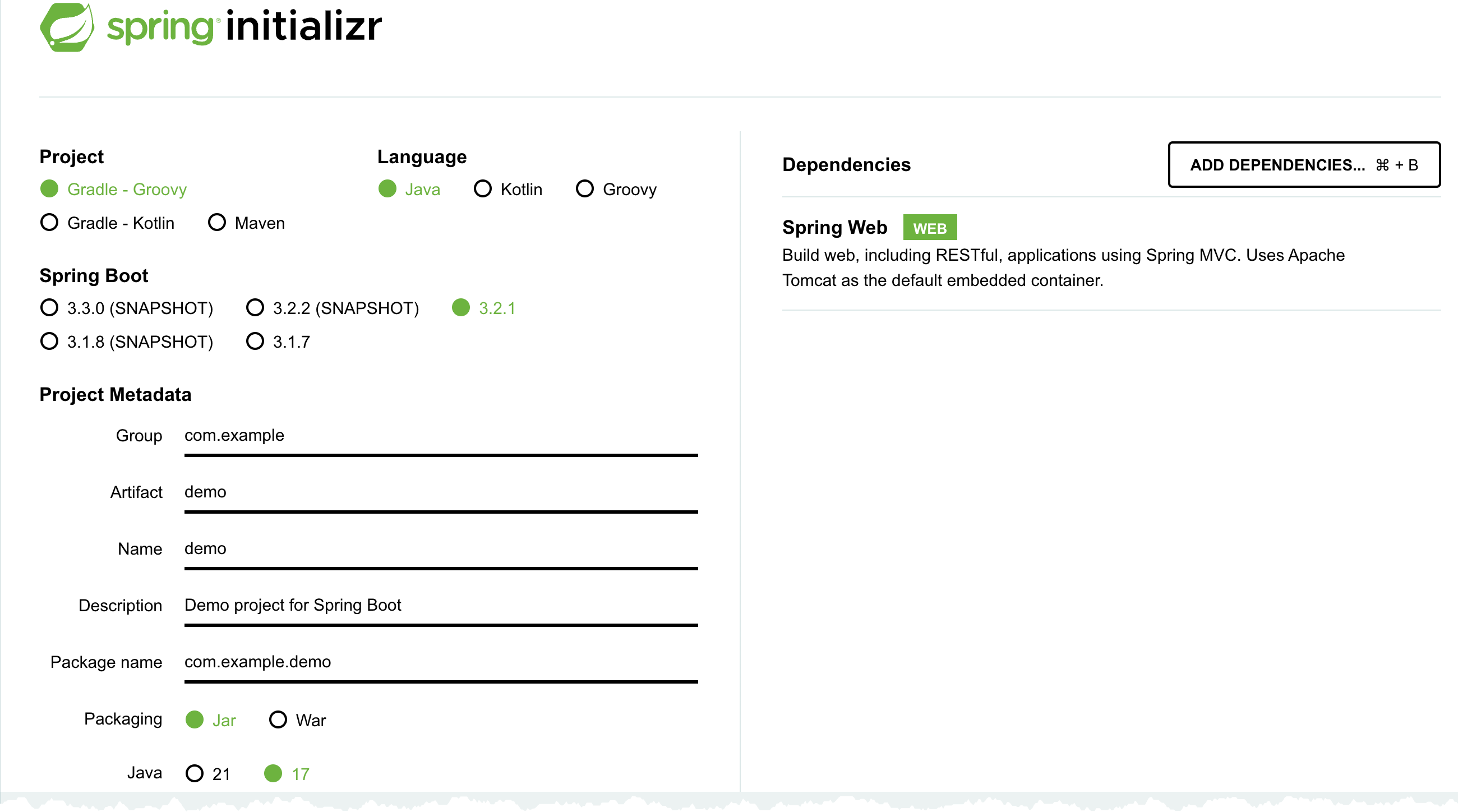

The first thing we now need to do is to build our application service and the container image. In this example I will create a simple Hello World service using Java, Spring Boot, and Docker. I will build the application using Gradle.

An easy way to create the skeleton project is to visit Spring Initializr. I set the Project to Gradle - Groovy and the Language to Java, and add the Spring Web dependency. Then all that's left is to generate the project and unpack it.

Make sure you can build the project using the command

    ./gradlew clean build

With that out of the way we can package our application in a container (Docker) image and push that to ECR. Before you start this step, make sure you have installed Docker and that it's running.

My Dockerfile looks like this. I base my image on the Amazon Corretto Java 17 image, copy the built Java application to application.jar, and start it using the java -jar command.

    FROM amazoncorretto:17
    VOLUME /tmp
    # The glob must match exactly one jar; narrow it if your build also
    # produces a second jar (for example a -plain.jar) in build/libs.
    COPY ./build/libs/demo-*.jar application.jar
    ENV PORT=8080
    EXPOSE $PORT
    ENTRYPOINT ["java","-jar","/application.jar"]

To build the image, run the docker buildx command. I set the platform to linux/amd64 since I'm doing this from a MacBook with an Apple CPU, which is ARM-based, while in AWS we will run on x86-based CPUs.

docker buildx build --platform linux/amd64 -t java-demo:latest .To run and test this locally we run command

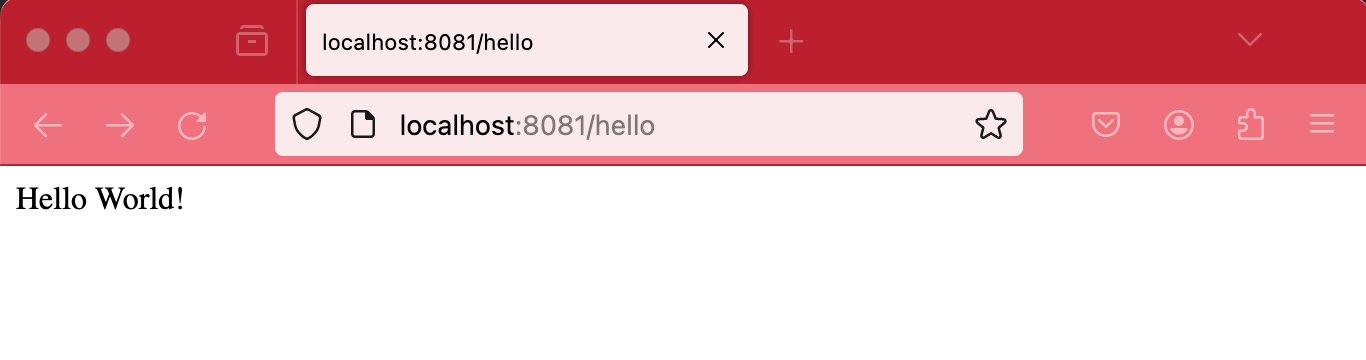

docker run -p 8081:8080 java-demo:latestIn the command I map port 8081 on my local machine to port 8080 in the container, with it running I can open up http://localhost:8081/hello in the browser, that should render a Hello World response.
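A Java service usually needs a few seconds before it answers, so a scripted version of this local check has to retry. The following is a sketch of such a polling loop; the function name `wait_for_ok` and the default of 30 attempts are assumptions for this example, not something the setup requires.

```shell
# Poll a URL once per second until it answers with HTTP 200
# or the given number of attempts is used up.
wait_for_ok() {
  local url="$1"
  local attempts="${2:-30}"
  local i status

  for i in $(seq 1 "$attempts"); do
    # curl reports 000 while the connection is still refused
    status="$(curl --silent --output /dev/null --write-out '%{http_code}' "$url")"
    if [ "$status" = "200" ]; then
      echo "ready after $i attempt(s)"
      return 0
    fi
    sleep 1
  done

  echo "not ready after $attempts attempts" >&2
  return 1
}
```

With the container started in another terminal, `wait_for_ok http://localhost:8081/hello` returns as soon as the endpoint responds, which also makes it usable as a gate in a CI/CD pipeline before pushing the image.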

When things are running we can push the image to ECR and continue our deployment.

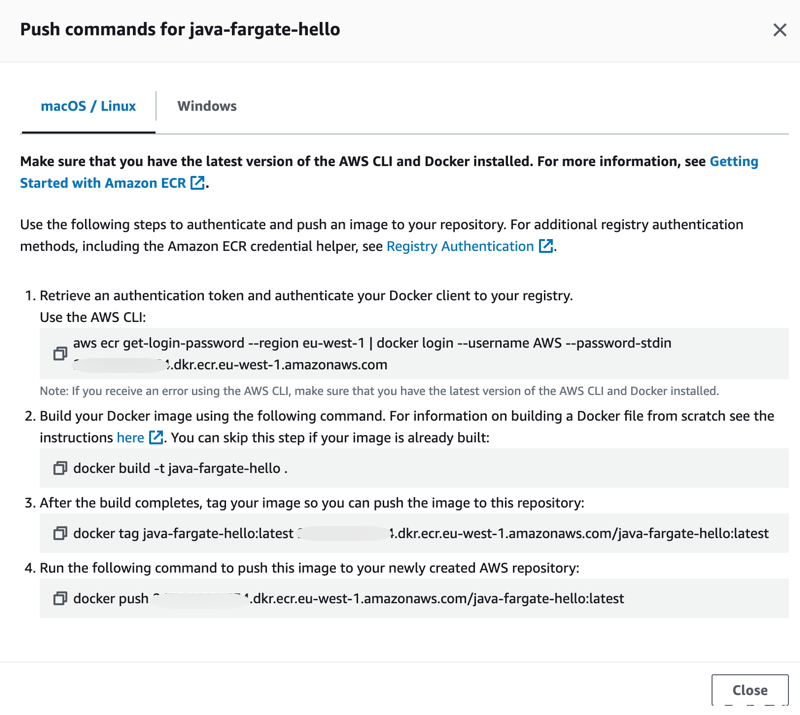

Before pushing an image to ECR we need to authenticate Docker against the registry, using the password returned by the get-login-password CLI command. There are more details in the documentation. Once authenticated we can go ahead and push the image. The commands we need to run look like this; replace REGION (eu-west-1 in my case), AWS_ACCOUNT, REPOSITORY_NAME, and TAG with your own values.

    aws ecr get-login-password --region REGION | docker login -u AWS --password-stdin AWS_ACCOUNT.dkr.ecr.REGION.amazonaws.com
    IMAGE="AWS_ACCOUNT.dkr.ecr.REGION.amazonaws.com/REPOSITORY_NAME:TAG"
    docker buildx build --platform linux/amd64 --push -t "$IMAGE" .

After running that, either locally or from our CI/CD pipeline, we should have an image in our ECR repository.

You can also find a description of the image push commands in the console.
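To confirm from the command line that the push succeeded, the image can be looked up by tag. This is a sketch using the AWS CLI; the function name `image_in_ecr` is made up for this example, and the repository name is assumed to follow the `${Application}-${Service}` pattern from the ECR template.

```shell
# Look up an image by tag in an ECR repository and print its tags.
# The AWS CLI exits with an error if no image with that tag exists.
image_in_ecr() {
  local repository="$1"
  local tag="$2"
  local region="$3"

  aws ecr describe-images \
    --region "$region" \
    --repository-name "$repository" \
    --image-ids "imageTag=$tag" \
    --query 'imageDetails[0].imageTags' \
    --output text
}
```

For example, `image_in_ecr demo-hello latest eu-west-1` would print the tags of the image that was just pushed, assuming an application called demo and a service called hello.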

Time to deploy and start the service.

Deploy service infrastructure

As mentioned before, in this setup I have tried to decouple each application service and its infrastructure from the common infrastructure, such as the ECS cluster and the ALB. So when we now deploy the application service infrastructure, we will hook into the ALB and create a new listener rule for our service, so the ALB can route traffic to it. We'll also hook into the ECS cluster and create the service, task definition, and everything else that is needed. This way we can keep the infrastructure for each application service separate from the common infrastructure. Each service is responsible for creating what it needs and hooking it into the shared resources. One thing we need to coordinate is the ALB listener rule priority, so we don't get a collision, since each rule on a listener must have a unique priority. What I normally do is assign each service its own range of 100, meaning that service X can assign priorities in the range 100 to 199 and service Y can assign priorities in the range 200 to 299. This way services can update and create additional rules if needed.
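The range-per-service convention is simple arithmetic, and a pipeline could enforce it before deploying. Below is a sketch of that idea; the notion of a numbered "slot" per service and both function names are assumptions introduced for this example.

```shell
# Print the first and last listener rule priority owned by a service slot.
# Slot 1 owns 100..199, slot 2 owns 200..299, and so on.
priority_range() {
  local slot="$1"
  local first=$(( slot * 100 ))
  local last=$(( first + 99 ))
  echo "$first $last"
}

# Succeed only if the priority falls inside the range owned by the slot.
priority_in_slot() {
  local priority="$1"
  local slot="$2"
  [ "$priority" -ge $(( slot * 100 )) ] && [ "$priority" -lt $(( (slot + 1) * 100 )) ]
}
```

The `Priority: 200` used in the service template corresponds to the first value of slot 2 in this scheme. ALB rule priorities must be between 1 and 50,000, so the convention leaves room for several hundred services.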

Now let's deploy our template to create the infrastructure. This deployment can take some time.

AWSTemplateFormatVersion: 2010-09-09

Description: Setup and configure the Service running in Fargate

Parameters:

Application:

Type: String

Description: Name of the application owning all resources

Service:

Type: String

Description: Name of the service

ServiceTag:

Type: String

Description: The service Docker Image tag to deploy

Default: latest

VPCStackName:

Type: String

Description: Name of the Stack that created the VPC

ServiceInfraStackName:

Type: String

Description: Name of the Stack that created the service infrastructure, such as ECS cluster etc

Resources:

ServiceTargetGroup:

Type: AWS::ElasticLoadBalancingV2::TargetGroup

Properties:

Name: !Sub ${Application}-${Service}-tg

Port: 8080

Protocol: HTTP

TargetGroupAttributes:

- Key: deregistration_delay.timeout_seconds

Value: 1

TargetType: ip

VpcId:

Fn::ImportValue: !Sub ${VPCStackName}:VpcId

HealthCheckEnabled: true

HealthCheckIntervalSeconds: 5

HealthCheckTimeoutSeconds: 2

HealthCheckPath: /hello

HealthCheckPort: traffic-port

HealthCheckProtocol: HTTP

HealthyThresholdCount: 2

UnhealthyThresholdCount: 2

Tags:

- Key: Name

Value: !Sub ${Application}-${Service}-target-group

LoadBalancerRule:

Type: AWS::ElasticLoadBalancingV2::ListenerRule

Properties:

Actions:

- TargetGroupArn: !Ref ServiceTargetGroup

Type: forward

Conditions:

- Field: path-pattern

Values: [/hello]

ListenerArn:

Fn::ImportValue: !Sub ${ServiceInfraStackName}:alb-listener

Priority: 200

ServiceLogGroup:

Type: AWS::Logs::LogGroup

Properties:

LogGroupName: !Sub /ecs/${Application}/${Service}

RetentionInDays: 60

Tags:

- Key: Name

Value: !Sub ${Application}-${Service}-logs

ServiceTaskRole:

Type: AWS::IAM::Role

Properties:

Description: Role for Demo task definition to set task specific role

RoleName: !Sub ${Application}-${Service}-task-role

AssumeRolePolicyDocument:

Statement:

- Effect: Allow

Principal:

Service:

- ecs-tasks.amazonaws.com

Action:

- sts:AssumeRole

Tags:

- Key: Name

Value: !Sub ${Application}-${Service}-role

ServiceTaskDefinition:

Type: AWS::ECS::TaskDefinition

Properties:

Family: !Sub ${Application}-${Service}-task

RequiresCompatibilities:

- FARGATE

NetworkMode: awsvpc

ExecutionRoleArn:

Fn::ImportValue: !Sub ${ServiceInfraStackName}:cluster-role

TaskRoleArn: !Sub ${Application}-${Service}-task-role

Cpu: 512

Memory: 1024

ContainerDefinitions:

- Name: !Ref Service

Image: !Sub ${AWS::AccountId}.dkr.ecr.${AWS::Region}.amazonaws.com/${Application}-${Service}:${ServiceTag}

Cpu: 512

Memory: 1024

Environment:

- Name: NAME

Value: !Ref Service

PortMappings:

- ContainerPort: 8080

Protocol: tcp

LogConfiguration:

LogDriver: awslogs

Options:

awslogs-group: !Sub /ecs/${Application}/${Service}

awslogs-region: !Sub ${AWS::Region}

awslogs-stream-prefix: !Ref Service

Tags:

- Key: Name

Value: !Sub ${Application}-${Service}-task

ApplicationService:

Type: AWS::ECS::Service

DependsOn: LoadBalancerRule

Properties:

ServiceName: !Sub ${Application}-${Service}

CapacityProviderStrategy:

- Base: 1

CapacityProvider: FARGATE

Weight: 1

LaunchType: FARGATE

TaskDefinition: !Ref ServiceTaskDefinition

NetworkConfiguration:

AwsvpcConfiguration:

AssignPublicIp: DISABLED

SecurityGroups:

- Fn::ImportValue: !Sub ${ServiceInfraStackName}:cluster-sg

Subnets:

- Fn::ImportValue: !Sub ${VPCStackName}:PrivateSubnetOne

- Fn::ImportValue: !Sub ${VPCStackName}:PrivateSubnetTwo

DesiredCount: 1

LoadBalancers:

- TargetGroupArn: !Ref ServiceTargetGroup

ContainerPort: 8080

ContainerName: !Ref Service

Cluster:

Fn::ImportValue: !Sub ${ServiceInfraStackName}:ecs-cluster

HealthCheckGracePeriodSeconds: 60

DeploymentConfiguration:

MaximumPercent: 200

MinimumHealthyPercent: 50

Tags:

- Key: Name

Value: !Sub ${Application}-${Service}-service

Outputs:

ServiceTargetGroup:

Value: !Ref ServiceTargetGroup

Export:

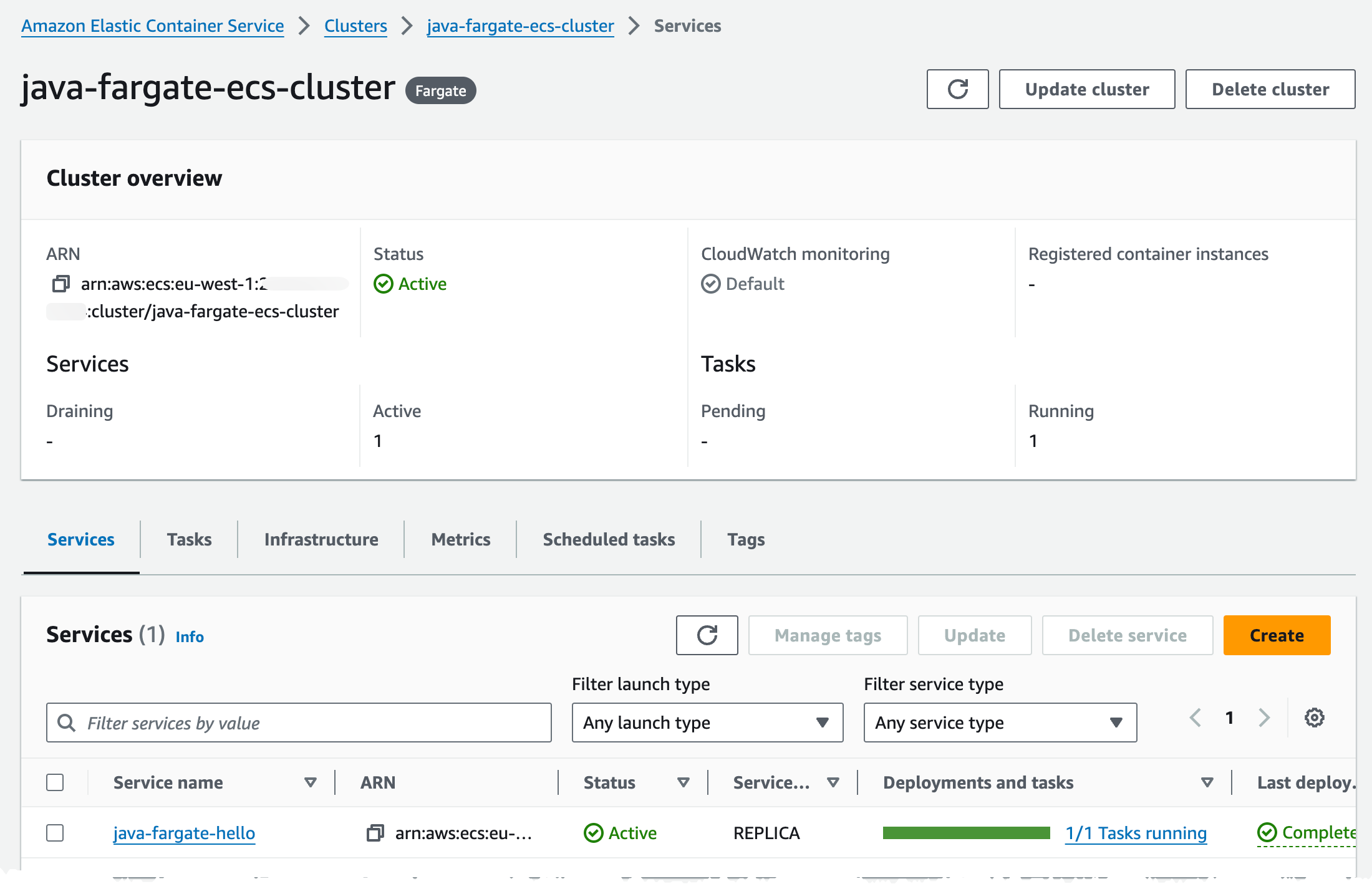

Name: !Sub ${AWS::StackName}:target-groupTurning to the console, when deployment has finished, we should have created a setup similar to this. The ECS cluster should show one service with 1/1 Running Task.
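The deployment behind this result could be run roughly as follows. This is a sketch with SAM CLI, not a required way of doing it: the file name `service.yaml`, the function name, and all stack names are assumptions, while the parameter names come from the template. Because the template creates an IAM role with an explicit `RoleName`, the deployment has to acknowledge `CAPABILITY_NAMED_IAM`.

```shell
# Deploy the service template and wire it to the VPC and infrastructure stacks.
# Assumption: the template is saved as service.yaml in the current directory.
deploy_service() {
  local stack_name="$1"
  local application="$2"
  local service="$3"
  local tag="$4"
  local vpc_stack="$5"     # stack that exported the VPC and subnet IDs
  local infra_stack="$6"   # stack that exported the cluster, role and listener

  sam deploy \
    --template-file service.yaml \
    --stack-name "$stack_name" \
    --resolve-s3 \
    --capabilities CAPABILITY_NAMED_IAM \
    --parameter-overrides \
      "Application=$application" \
      "Service=$service" \
      "ServiceTag=$tag" \
      "VPCStackName=$vpc_stack" \
      "ServiceInfraStackName=$infra_stack"
}
```

Passing the image tag as a parameter means a new version can be rolled out by pushing an image with a new tag and redeploying with that tag, for example `deploy_service demo-hello-service demo hello v2 demo-vpc demo-infra`.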

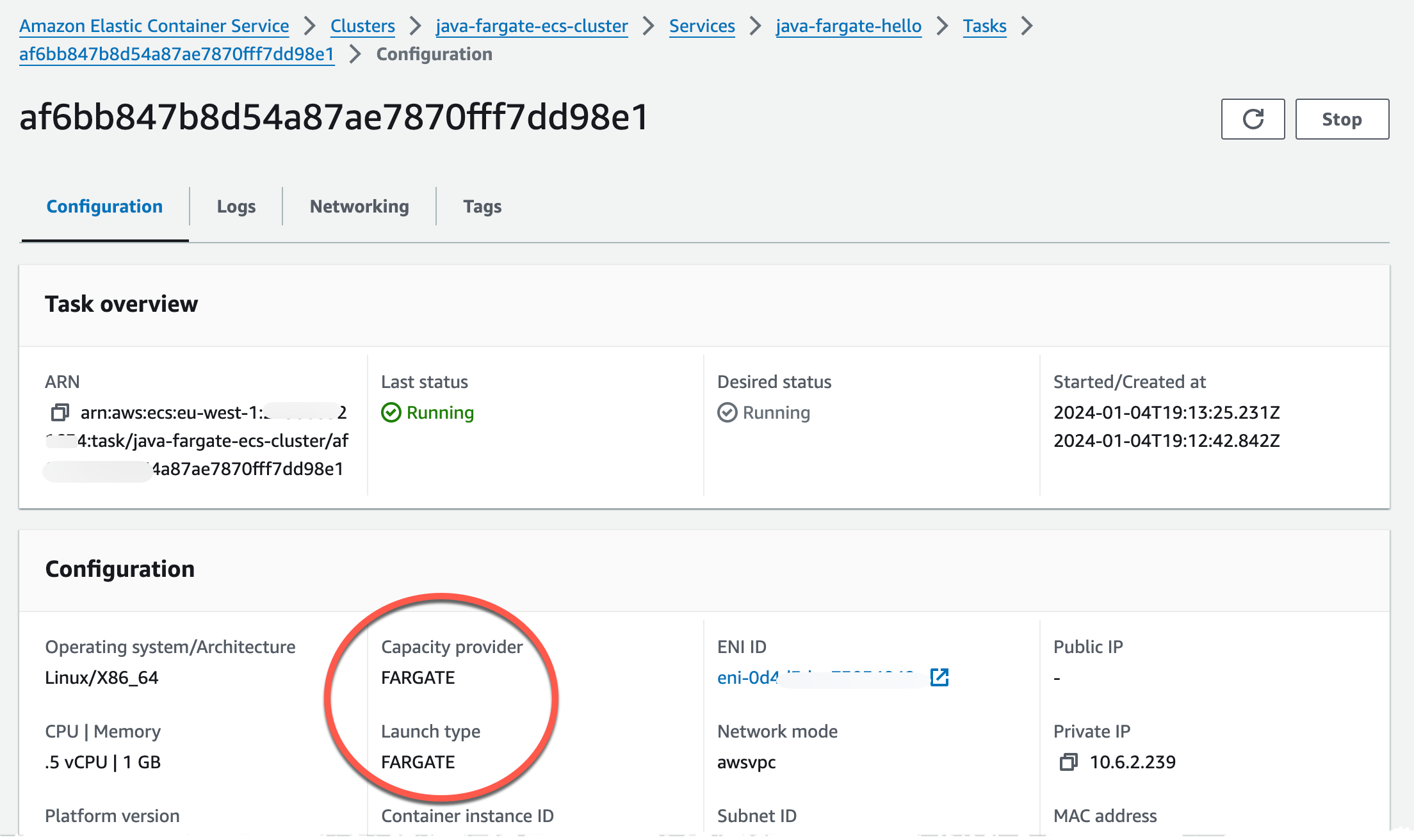

Navigating to the task configuration, by clicking the service, then Tasks, and then the task ID, shows that we run on the Fargate launch type with the Fargate capacity provider.

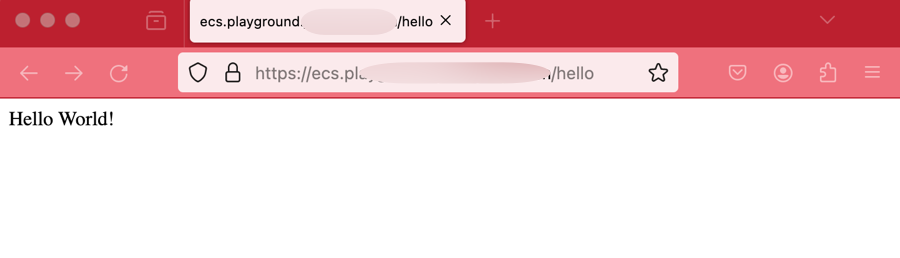

Navigating to the DNS record we created we should now see a Hello World message.

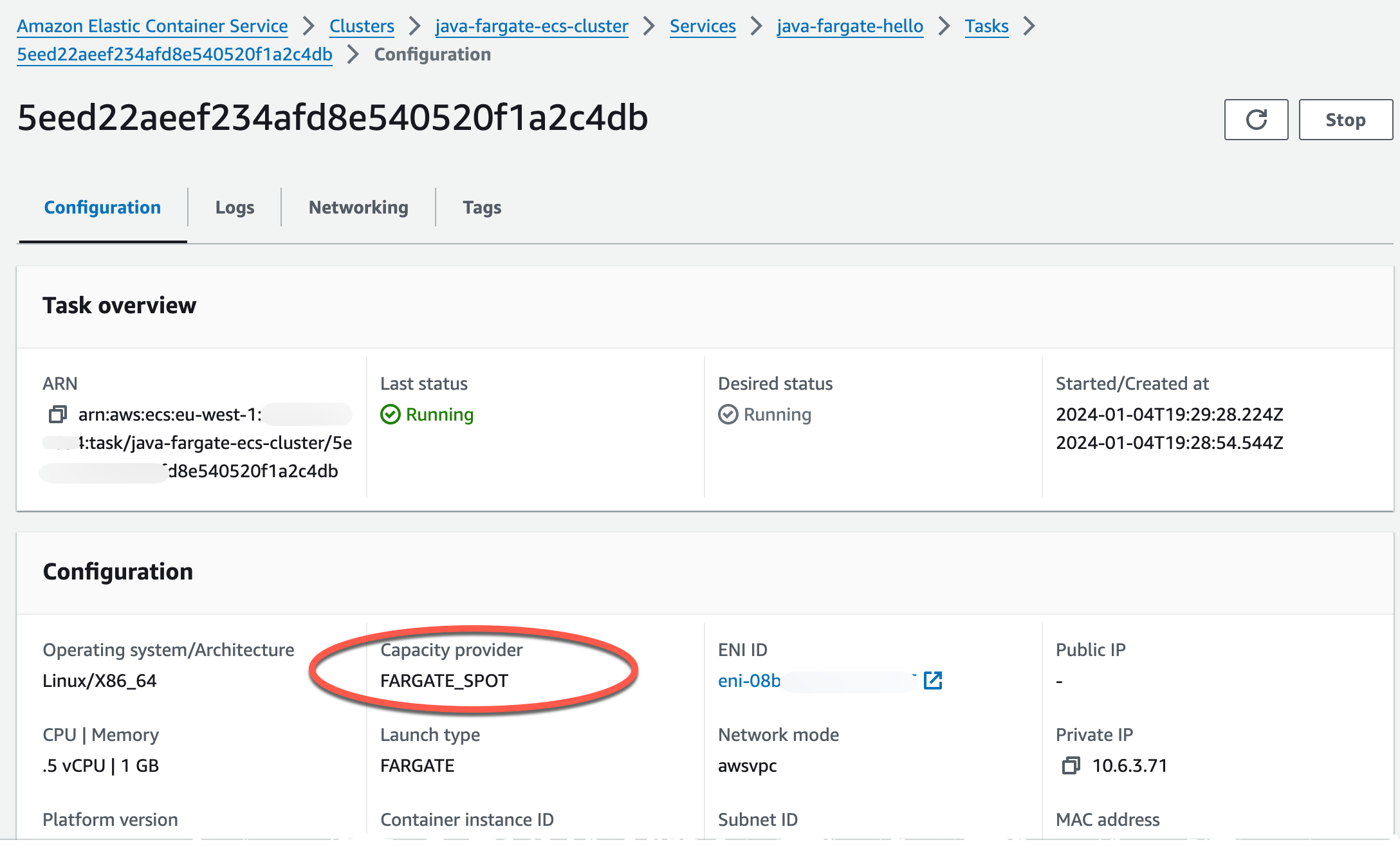

Now, to instead run this on Fargate Spot we need to update our capacity provider strategy and redeploy, so let's make a minor change to the template we used.

    ...
       ApplicationService:
         Type: AWS::ECS::Service
         DependsOn: LoadBalancerRule
         Properties:
           ServiceName: !Sub ${Application}-${Service}
           CapacityProviderStrategy:
             - Base: 1
    -          CapacityProvider: FARGATE
    +          CapacityProvider: FARGATE_SPOT
               Weight: 1
           TaskDefinition: !Ref ServiceTaskDefinition
           NetworkConfiguration:
             AwsvpcConfiguration:
               AssignPublicIp: DISABLED
               SecurityGroups:
                 - Fn::ImportValue: !Sub ${ServiceInfraStackName}:cluster-sg
               Subnets:
                 - Fn::ImportValue: !Sub ${VPCStackName}:PrivateSubnetOne
                 - Fn::ImportValue: !Sub ${VPCStackName}:PrivateSubnetTwo
           DesiredCount: 1
           LoadBalancers:
             - TargetGroupArn: !Ref ServiceTargetGroup
               ContainerPort: 8080
               ContainerName: !Ref Service
           Cluster:
             Fn::ImportValue: !Sub ${ServiceInfraStackName}:ecs-cluster
           HealthCheckGracePeriodSeconds: 60
           DeploymentConfiguration:
             MaximumPercent: 200
             MinimumHealthyPercent: 50
           Tags:
             - Key: Name
               Value: !Sub ${Application}-${Service}-service
    ...

After redeployment we can see that we now run on Fargate Spot instead of on-demand.
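The capacity provider of the running tasks can also be read with the AWS CLI instead of the console. The sketch below assumes the naming from the templates (cluster `${Application}-ecs-cluster`, service `${Application}-${Service}`); the function name is made up for this example.

```shell
# Print the capacity provider of each running task in a service.
service_capacity_providers() {
  local cluster="$1"
  local service="$2"
  local task_arns

  task_arns="$(aws ecs list-tasks --cluster "$cluster" --service-name "$service" \
    --query 'taskArns' --output text)"
  if [ -z "$task_arns" ]; then
    echo "no running tasks found" >&2
    return 1
  fi

  # Word splitting is intended here: each task ARN becomes its own argument.
  aws ecs describe-tasks --cluster "$cluster" --tasks $task_arns \
    --query 'tasks[].capacityProviderName' --output text
}
```

With an application called demo and a service called hello, `service_capacity_providers demo-ecs-cluster demo-hello` should print FARGATE_SPOT once the redeployed task is running.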

Final Words

In this post I showed how to run a Java Spring Boot service on ECS with Fargate. We looked at the infrastructure that we need to create and a way to decouple the services and tasks from the cluster and the ALB creation process. We also looked at the changes needed to run this on Fargate Spot instead of on-demand.

Don't forget to follow me on LinkedIn and X for more content, and read the rest of my blog posts.