Extending My Blog with Proofreading by Amazon Nova

Over the years, blogging has become something I really enjoy doing. Often, I build something and then write a post about the solution and what I learned. More than once, it has been about serverless and event-driven architecture. However, there is one thing I don't like: as a non-native English speaker, I tend to make both spelling and grammatical errors, and even if I proofread several times, I always miss something.

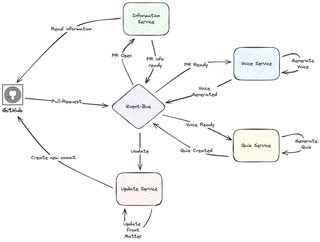

I've previously extended my blog with voice generation using Amazon Polly and gamified learning with automated quizzes.

This time, I'm adding one more extension that will also save me time: an automated proofreading service using Amazon Nova.

My Problem

When I write my blog posts, I focus on the solution and the architecture, and on presenting them well. I pay much less attention to spelling, even though I have a spell-checking extension installed in VS Code. Proofreading my posts also takes a lot of time, and to be honest, I'm not always that thorough. So, what I needed was an automated solution that could:

- Maintain my writing style: I don't want the proofreading to change my voice or technical explanations.

- Preserve markdown formatting: My blog posts are written in markdown with code blocks, links, and images.

- Integrate seamlessly: The solution should fit into my existing solution with voice and quiz generation.

- Be cost-effective: Run only on certain Pull-requests, and be serverless and event-driven just like the voice and quiz solution.

Introducing Amazon Nova

Amazon Nova is Amazon's own family of LLMs, announced at re:Invent 2024. Before Nova, there were several Titan models, and to be honest, Titan wasn't that good. Nova, on the other hand, is really good and a cost-efficient option. Like many other models, the Nova models are available through Amazon Bedrock, which provides an API for accessing various large language models.

What is Amazon Bedrock?

Amazon Bedrock is a fully managed service that makes it easy to access a wide range of foundation models. They are all available through a single API, which lets me build generative AI solutions in an easy way. Bedrock offers us:

- Model choice: Access to models from Amazon, Anthropic, Meta, and several more, with new models constantly being added.

- Easy integration: Serverless and managed, with simple API calls without managing infrastructure.

- Fine-tuning capabilities: Customize models with your own data.

- Security and data privacy: Our data is not used to train any models.

- Bedrock Agents: Agents that can be used to build applications that combine LLMs and APIs to create powerful solutions.

Amazon Nova Models

Amazon Nova offers, at this moment, four understanding models, two creative models, and one speech model.

The understanding models can handle different inputs like text, images, video, documents, and code. They all output the response in text. These models are:

- Nova Micro: Text only, low latency and fast responses with a low cost.

- Nova Lite: Low-cost multimodal model that is very fast at processing text, images, and video.

- Nova Pro: Very capable multimodal model, for a good price, that offers a great balance of accuracy, speed, and cost for a wide range of tasks.

- Nova Premier: The most capable multimodal model for complex tasks, not available in that many regions.

I selected Nova Pro because, for proofreading blog posts, it provides a great balance of accuracy and cost-effectiveness. It understands context, maintains my writing style, and makes small corrections without over-editing my content.

The creative models are:

- Amazon Nova Canvas: A high-quality image generation model.

- Amazon Nova Reel: A video generation model.

Amazon Nova Canvas and Amazon Nova Reel are, right now, not available in that many regions. You have to use us-east-1 (N. Virginia), eu-west-1 (Ireland), or ap-northeast-1 (Tokyo).

The speech model is:

- Amazon Nova Sonic: A speech model that delivers real-time, human-like voice conversations.

Amazon Nova Sonic is, right now, available in us-east-1 (N. Virginia), eu-north-1 (Stockholm), and ap-northeast-1 (Tokyo) and supports English (US, UK) and Spanish.

Not having models available in all regions or even the same regions can become a problem when building our applications.

Cross-Region Inference

One thing we need to bring up is the cross-region inference setup in Bedrock, which is done via inference profiles. With an inference profile, our calls are automatically routed to the region with the most available resources, ensuring that our calls can be fulfilled. When using the Nova models outside of us-east-1 (N. Virginia) and AWS GovCloud (US-West), we need to use an inference profile when calling the Bedrock API. If we don't, we will get hit by an error saying that the model is not available for on-demand (serverless) invocation in that region.

As an example, if we call eu.amazon.nova-pro-v1:0 from eu-west-1, where my solution runs, the inference call stays within the EU and can be served from one of the EU regions: eu-central-1, eu-north-1, eu-west-1, or eu-west-3.
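The naming convention above, a geography prefix in front of the model ID, can be sketched in a few lines of Python. This is only an illustration and not part of my solution: the helper name `inference_profile_id` and the prefix table are assumptions, and it is worth checking in the Bedrock console which inference profiles actually exist for your account and region.

```python
# Hypothetical helper: derive a cross-region inference profile ID from a region.
# The prefix table is an assumption for illustration and does not cover
# special partitions such as AWS GovCloud.
GEO_PREFIXES = {"us": "us", "eu": "eu", "ap": "apac"}


def inference_profile_id(region: str, model_id: str) -> str:
    """Return '<geo>.<model_id>' for the geography the region belongs to."""
    geo = GEO_PREFIXES.get(region.split("-")[0])
    if geo is None:
        raise ValueError(f"No known inference profile geography for {region}")
    return f"{geo}.{model_id}"


print(inference_profile_id("eu-west-1", "amazon.nova-pro-v1:0"))
# prints: eu.amazon.nova-pro-v1:0
```

The resulting string is what would be passed as the model ID when calling the Bedrock runtime API from that region.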

Solution Architecture

In the post gamified learning with automated quizzes, I introduce the architecture. It's built on Amazon EventBridge, divided into multiple services, and runs as a Saga Pattern.

As explained in the post above, I do two major things: I generate the quiz for the gamified learning, and I use Polly to generate a voice that reads my blog post. GitHub Actions builds the page and uploads it to a staging bucket in S3. When the upload to the staging bucket is complete, GitHub Actions posts an event onto an EventBridge event bus, and this is where my AWS-based part takes over.

The AWS-based CI/CD pipeline is event-driven and serverless. The primary services used are Step Functions, Lambda, and EventBridge. The flow is based on a saga pattern where the domain services hand over by posting domain events on the event bus, which moves the saga to the next step.
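To make the hand-over concrete, here is a minimal sketch of how a service could post a domain event to the event bus with boto3. The source, detail type, payload, and bus name are all hypothetical placeholders, not the names used in my solution. The entry is built in a separate function so it can be inspected without AWS access.

```python
import json


def build_domain_event(source: str, detail_type: str, detail: dict, bus_name: str) -> dict:
    """Build one entry in the shape the EventBridge PutEvents API expects."""
    return {
        "Source": source,
        "DetailType": detail_type,
        "Detail": json.dumps(detail),  # Detail must be a JSON string
        "EventBusName": bus_name,
    }


def publish(entry: dict) -> None:
    """Send the entry to EventBridge (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder works without AWS access

    response = boto3.client("events").put_events(Entries=[entry])
    if response.get("FailedEntryCount", 0) > 0:
        raise RuntimeError(f"PutEvents failed: {response['Entries']}")


entry = build_domain_event(
    source="blog.proofreading",           # hypothetical source name
    detail_type="ProofreadingCompleted",  # hypothetical domain event
    detail={"pullRequest": 42},           # hypothetical payload
    bus_name="blog-event-bus",            # hypothetical bus name
)
print(entry["Detail"])
```

An EventBridge rule matching on the source and detail type would then start the next step of the saga.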

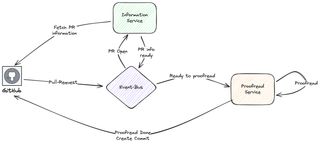

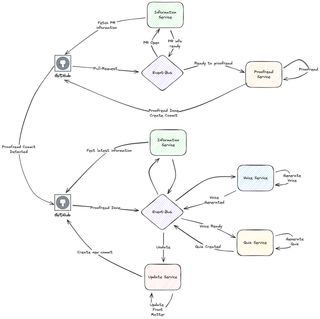

As my voice generation is done from the rendered HTML files, for best accuracy, I couldn't just plug in the proofreading as a first step in this saga. The solution was to add GitHub Actions into the saga. One day I might move away from GitHub Actions to something else, but as of now, it will be part of the entire solution.

So, I add the following part, which now runs when my pull request is opened.

In this part, I fetch information from GitHub, and call Amazon Nova via Amazon Bedrock to do my proofreading. When completed, the service adds a new commit to the PR with the updated markdown file.

Running the Voice and Quiz Parts

Now, I need a way to start the voice and quiz steps, which previously ran when the PR was created. Instead, I now have a GitHub Action that detects the proofreading commit and starts that flow.

This gives me two distinct parts in the flow. All major logic runs on AWS, and GitHub Actions builds the staging blog and invokes the different parts by sending different events to EventBridge.

Technical Deep Dive

Let's take a technical deep dive into the solution and the actual proofreading service.

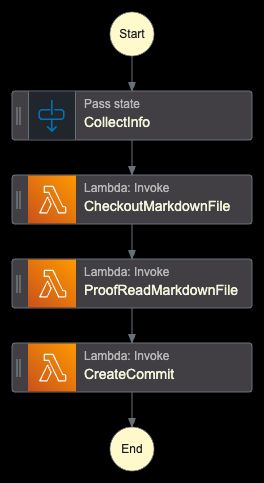

Step Functions

The heart of everything is an orchestrator implemented with Step Functions. It checks out the files, performs the proofreading, and creates the new commit.

The work is basically carried out by three Lambda functions. The core Lambda function is the one performing the actual proofreading. The functions checking out the PR and creating a new commit are both using Octokit. To see how these work, check my previous posts. I will focus on the actual proofreader.
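Before turning to the proofreader, the three-step orchestration described above can be sketched as an Amazon States Language definition, written here as a Python dictionary. This is a simplified illustration, not my actual state machine: the state names and Lambda ARNs are hypothetical, and error handling and retries are left out.

```python
import json

# Hypothetical Lambda ARNs; replace with the functions in your own account.
CHECKOUT_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:checkout-pr"
PROOFREAD_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:proofread"
COMMIT_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:create-commit"

definition = {
    "Comment": "Sketch of a proofreading orchestrator",
    "StartAt": "CheckoutPullRequest",
    "States": {
        "CheckoutPullRequest": {"Type": "Task", "Resource": CHECKOUT_ARN, "Next": "Proofread"},
        "Proofread": {"Type": "Task", "Resource": PROOFREAD_ARN, "Next": "CreateCommit"},
        "CreateCommit": {"Type": "Task", "Resource": COMMIT_ARN, "End": True},
    },
}


def execution_order(machine: dict) -> list:
    """Follow the Next pointers from StartAt and return the state names in order."""
    order = []
    name = machine["StartAt"]
    while True:
        order.append(name)
        state = machine["States"][name]
        if state.get("End"):
            return order
        name = state["Next"]


print(execution_order(definition))
print(json.dumps(definition, indent=2))
```

The JSON printed at the end is the form in which a definition like this would be handed to Step Functions.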

Proofreading Lambda Function

The function initializes everything, creates the prompt, calls Nova via Bedrock, and stores the proofread version in S3. The original post is also kept as a copy with an -original suffix.

```python
import json
import os

import boto3
from botocore.exceptions import ClientError


def handler(event, context):
    print("Received event:", json.dumps(event, indent=2))

    try:
        bucket = event.get("S3Bucket")
        key = event.get("Key")

        if not bucket or not key:
            raise ValueError("Both 'S3Bucket' and 'Key' must be provided in the event")

        s3_client = boto3.client("s3")
        bedrock_client = boto3.client("bedrock-runtime", region_name="eu-west-1")

        # Read the markdown post from S3
        response = s3_client.get_object(Bucket=bucket, Key=key)
        file_content = response["Body"].read().decode("utf-8")

        prompt = f"""You are a professional technical editor and proofreader, expert in AWS and Cloud computing. Please carefully proofread the following markdown blog post for spelling and grammatical errors.

Instructions:
- Fix any spelling mistakes
- Correct grammatical errors
- Maintain the original markdown formatting
- Keep the tone and style consistent
- Do not change the meaning or structure of the content
- Return only the corrected markdown text without any additional commentary
- Do not surround the proofread version with ```markdown

Here is the markdown content to proofread:

{file_content}"""

        request_body = {
            "messages": [{"role": "user", "content": [{"text": prompt}]}],
            "inferenceConfig": {
                "temperature": 0.1,
                "topP": 0.9,
                "maxTokens": 10240,
            },
        }

        # Call Nova Pro through the EU cross-region inference profile
        bedrock_response = bedrock_client.invoke_model(
            modelId="eu.amazon.nova-pro-v1:0",
            body=json.dumps(request_body),
            contentType="application/json",
        )

        response_body = json.loads(bedrock_response["body"].read())
        proofread_content = response_body["output"]["message"]["content"][0]["text"]

        if key.endswith(".md"):
            base_name = key[:-3]
        else:
            base_name = key

        # Keep a copy of the original post before overwriting it
        original_backup_key = f"{base_name}-original.md"
        s3_client.copy_object(
            Bucket=bucket,
            CopySource={"Bucket": bucket, "Key": key},
            Key=original_backup_key,
        )

        # Store the proofread version under the original key
        s3_client.put_object(
            Bucket=bucket,
            Key=key,
            Body=proofread_content.encode("utf-8"),
            ContentType="text/markdown",
        )

        return {
            "statusCode": 200,
            "body": json.dumps(
                {
                    "message": "Proofreading completed successfully",
                    "proofread_file": f"s3://{bucket}/{key}",
                    "backup_file": f"s3://{bucket}/{original_backup_key}",
                    "original_length": len(file_content),
                    "proofread_length": len(proofread_content),
                }
            ),
        }

    except ClientError as e:
        error_code = e.response["Error"]["Code"]
        error_message = e.response["Error"]["Message"]
        print(f"AWS Client Error ({error_code}): {error_message}")
        return {
            "statusCode": 500,
            "body": json.dumps(
                {
                    "error": "AWS Client Error",
                    "error_code": error_code,
                    "message": error_message,
                }
            ),
        }

    except ValueError as e:
        print(f"Validation Error: {str(e)}")
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "Validation Error", "message": str(e)}),
        }

    except Exception as e:
        print(f"Unexpected error: {str(e)}")
        return {
            "statusCode": 500,
            "body": json.dumps({"error": "Internal Server Error", "message": str(e)}),
        }
```

Prompt Engineering

One important part that should not be forgotten is prompt engineering. The prompt needs to be clear and include detailed instructions on how the LLM should act. I needed to iterate on the prompt several times to get the result I needed. My prompt includes specific instructions to:

- Fix spelling and grammatical errors

- Maintain the original markdown formatting

- Preserve technical terminology

- Keep the writing style and tone consistent

- Return only the corrected content without commentary
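Even with an instruction to return only the corrected content, a model can occasionally wrap its answer in a markdown code fence. As a safety net, a small post-processing step could remove such a wrapper before the file is stored. The helper below is a hypothetical sketch and not part of my Lambda function. It only strips a fence that wraps the entire response.

```python
def strip_markdown_fence(text: str) -> str:
    """Remove a wrapping ```markdown ... ``` fence if the model added one."""
    lines = text.strip().splitlines()
    # Only treat the response as wrapped when the first line opens a markdown
    # fence and the last line closes it; anything else is returned untouched.
    opens = len(lines) >= 2 and lines[0].strip() in ("```markdown", "```md")
    if opens and lines[-1].strip() == "```":
        return "\n".join(lines[1:-1])
    return text


wrapped = "```markdown\n# Title\n\nSome text.\n```"
print(strip_markdown_fence(wrapped))
```

Text that is not wrapped is returned unchanged, so the helper is safe to apply to every response.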

In the end, the following prompt gave the result I wanted.

You are a professional technical editor and proofreader, expert in AWS and Cloud computing. Please carefully proofread the following markdown blog post for spelling and grammatical errors.

Instructions:

- Fix any spelling mistakes

- Correct grammatical errors

- Maintain the original markdown formatting

- Keep the tone and style consistent

- Do not change the meaning or structure of the content

- Return only the corrected markdown text without any additional commentary

- Do not surround the proofread version with ```markdown

Here is the markdown content to proofread:

Starting Voice and Quiz Part

The second part of the flow, generating voice and quiz, runs when a new commit with the commit message "Proofread by Amazon Nova" is added to the pull request. The action starts when the synchronize event occurs. The first job checks out the commit and reads the latest commit message. The following jobs then run only if the message matches.

```yaml
name: Start Polly Voice and Quiz Flow

on:
  pull_request:
    types: [synchronize]
    paths:
      - "**/posts/*.md"

jobs:
  check-commit:
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: ./
    outputs:
      message: ${{ steps.commit-msg.outputs.message }}
    steps:
      - name: Checkout
        uses: actions/checkout@v4
        with:
          fetch-depth: 0
          # Assumption: check out the PR head commit so that git log
          # returns the latest commit message on the pull request
          ref: ${{ github.event.pull_request.head.sha }}
      - name: Get last commit message
        id: commit-msg
        run: |
          message=$(git log -1 --pretty=format:"%s" HEAD)
          echo "Last commit message: ${message}"
          echo "message=${message}" >> $GITHUB_OUTPUT

  build:
    needs: check-commit
    if: contains(needs.check-commit.outputs.message, 'Proofread by Amazon Nova')
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Install and Build
        run: |
          npm ci
          npm run build

  deploy:
    needs: build
    runs-on: ubuntu-latest
    permissions:
      id-token: write
      contents: read
    steps:
      - name: Upload build blog to S3
        working-directory: ./
        run: |
          aws s3 sync --delete public/. s3://<My-Staging-Bucket>
          aws events put-events --entries '....'
```

Conclusion

Extending my blog with automated proofreading using Amazon Nova has saved me a lot of time. I now do a quick proofreading myself, then delegate the big part to Amazon Nova. The extension fits great into the event-driven design I already had in place.

So, with Bedrock and Amazon Nova, together with a serverless approach, I extended my solution so that it is:

- Reliable: Consistent proofreading quality for every post

- Cost-effective: Pay-per-use model with minimal operational costs

Most importantly, automated proofreading allows me to focus on what I enjoy the most: creating technical content that helps others learn about cloud technologies.

Going forward, I have several other ideas that will enhance my blog using Amazon Nova and AI. Stay tuned for more fun solutions!

Final Words

Don't forget to follow me on LinkedIn, and for more content, read the rest of my blogs.

As Werner says: Now Go Build!
