Serverless voice with Amazon Polly

Hurray!! It's now possible to listen to my blog posts, although only on https://jimmydqv.com for the moment. When I learn new things, I often prefer listening to text over reading it; I pick up new information much more easily when I hear it. So for a very long time I have wanted to add voice to my blog, to give everyone the option to listen.

Goal

I wanted to provide you all with an alternative way of consuming my blog posts. I personally like to listen to content, since that lets me do other things, such as driving or exercising, at the same time. As mentioned, I also pick up information much more easily when I hear it, and therefore I wanted to give you the same option. As an extra benefit, it makes my blog more accessible to people with visual impairments or reading difficulties. Finally, I find it a more engaging and interactive experience.

The problem

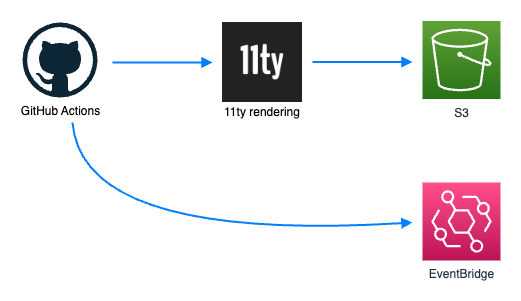

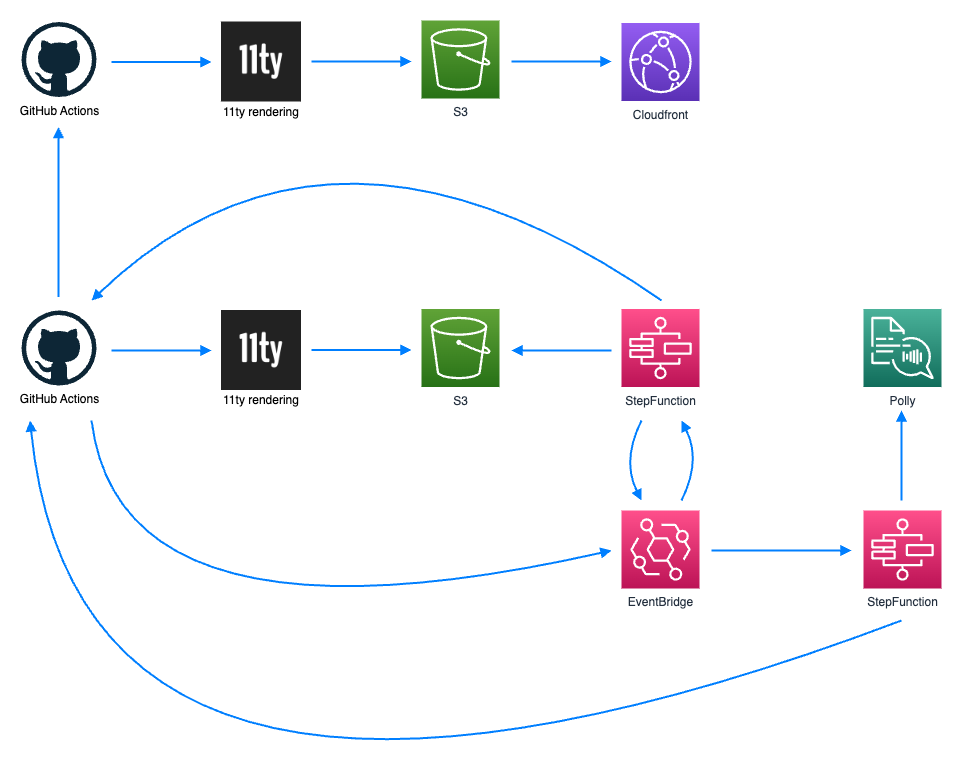

My blog is a static site hosted in S3 and CloudFront. I write my posts in markdown and use 11ty to render HTML in a GitHub Actions workflow. The files are then uploaded to the S3 bucket, and the post goes live.

I want the voice to be based on the actual rendered HTML, so it's as accurate as possible. That means 11ty must first render the post before I can generate voice for it. The markdown file then needs to be updated with the audio file and re-rendered before being pushed to the production S3 bucket. The voice therefore has to be generated as an intermediate step, before the post goes live. Otherwise I would end up in an endless loop where the post gets rendered, updated with voice, rendered again, and so on.
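The ordering of the steps is what breaks the loop. A complementary safeguard, which is not part of the setup described in this post, would be to skip posts whose front matter already points at an audio file. The following is a minimal Python sketch. It assumes a flat `key: value` front matter block and uses a hypothetical `audio` key.

```python
def front_matter(markdown: str) -> dict:
    """Parse a flat `key: value` front-matter block delimited by '---' lines.

    Deliberately minimal: nested YAML, lists and multi-line values are not handled.
    """
    lines = markdown.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta = {}
    for line in lines[1:]:
        if line.strip() == "---":
            return meta
        # Split on the first colon only, so values may contain colons.
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return {}  # no closing delimiter: treat as no front matter


def needs_voice(markdown: str) -> bool:
    """True if the post has no (hypothetical) `audio` entry yet."""
    return not front_matter(markdown).get("audio")
```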

AWS Solution and services

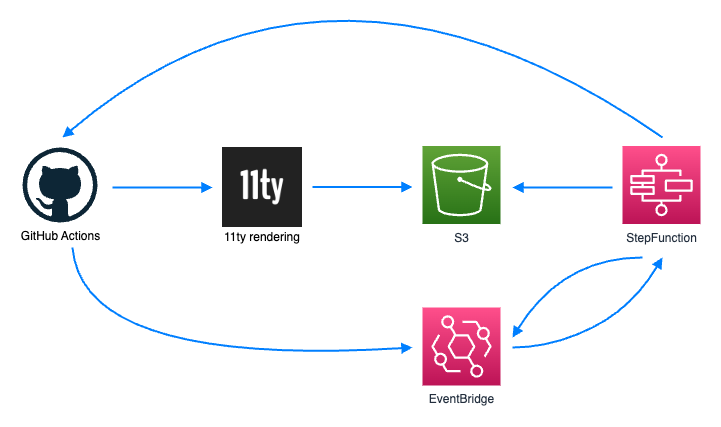

When I have finished writing a post, I create a new pull-request against the main branch of my GitHub repository. I normally inspect the post to ensure it looks as I expect before merging it. As soon as a pull-request has been merged, a GitHub Action is invoked to render and upload the post to the production environment. Part of the solution is a new GitHub Action that runs when the pull-request is opened. It renders the post, uploads it to a development bucket in S3, and posts a message with information about the pull-request to an Amazon EventBridge event-bus.
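The event itself is small. Below is a minimal Python sketch of one EventBridge `PutEvents` entry that the action could send. The source, detail-type and detail field names are hypothetical. Note that `Detail` must be a JSON string, not an object.

```python
import json


def build_pull_request_event(pr_number: int, branch: str, bus_name: str) -> dict:
    """Build one entry for the EventBridge PutEvents API."""
    return {
        "Source": "blog.github-actions",   # hypothetical source name
        "DetailType": "PullRequestOpened",  # hypothetical detail type
        # PutEvents expects Detail as a JSON-encoded string.
        "Detail": json.dumps({"pullRequestNumber": pr_number, "branch": branch}),
        "EventBusName": bus_name,
    }
```

With boto3, the entry would be sent as `boto3.client("events").put_events(Entries=[entry])`. From a shell step in the workflow, the AWS CLI equivalent is `aws events put-events`.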

Now, this is only the first part of the solution. Next, an AWS Step Functions state machine is invoked by the message the GitHub Action added to EventBridge. This state machine processes the information about the pull-request. I'm using Octokit for all calls to GitHub. The code below shows how I get information about the pull-request; the pull-request number comes from the event.

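```javascript
import { Octokit } from "octokit";

// Module-level client, created once and reused across invocations.
let octokit;

const initializeOctokit = async () => {
  if (!octokit) {
    // Placeholder token value; a real deployment should not hard-code it.
    const gitHubSecret = "ACCESS_TOKEN";
    octokit = new Octokit({ auth: gitHubSecret });
  }
};

// Fetch the pull-request; the number comes from the EventBridge event.
const getPullRequest = async (pullRequestNumber) => {
  await initializeOctokit();
  const result = await octokit.rest.pulls.get({
    owner: process.env.OWNER,
    repo: process.env.REPO,
    pull_number: pullRequestNumber,
  });
  return result.data;
};
```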

The state machine extracts information about the post and uses it to look up the rendered post in the development S3 bucket. When all information about both the pull-request and the rendered post has been parsed, a new message with this information is added to EventBridge.
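How the rendered post is located depends on the repository layout, which this post doesn't describe. The sketch below makes three assumptions:

- Posts live under `src/posts`.
- 11ty's input directory is `src`.
- 11ty's default permalinks are used, so `posts/my-post.md` renders to `posts/my-post/index.html`.

`number` and `head.ref` are fields of the GitHub pull-request object. The changed file names could come from Octokit's `pulls.listFiles`. The detail field names are hypothetical.

```python
from pathlib import PurePosixPath
from typing import Optional


def rendered_key(markdown_path: str, input_dir: str = "src") -> str:
    """Map a markdown source path to the HTML file 11ty writes by default."""
    relative = PurePosixPath(markdown_path).relative_to(input_dir)
    if relative.stem == "index":
        # posts/my-post/index.md -> posts/my-post/index.html
        return str(relative.parent / "index.html")
    # posts/my-post.md -> posts/my-post/index.html
    return str(relative.with_suffix("") / "index.html")


def build_post_detail(pr: dict, changed_files: list, posts_dir: str = "src/posts",
                      input_dir: str = "src") -> Optional[dict]:
    """Combine pull-request data and the changed post into the next event's detail."""
    post = next(
        (f for f in changed_files if f.startswith(posts_dir + "/") and f.endswith(".md")),
        None,
    )
    if post is None:
        return None  # the pull-request doesn't touch a post
    return {
        "pullRequestNumber": pr["number"],
        "branch": pr["head"]["ref"],
        "markdownPath": post,
        "renderedKey": rendered_key(post, input_dir),
    }
```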

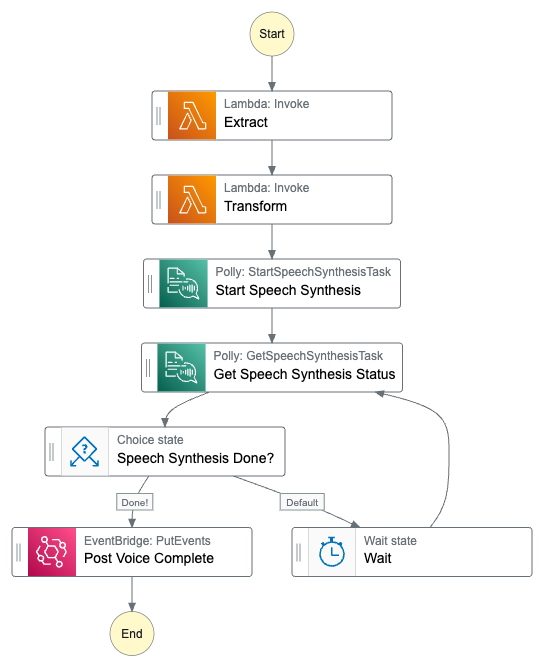

We are closing in on the solution, but there are still several steps left before we have properly rendered voice. The message posted by the previous state machine is picked up by a voice rendering pipeline. This state machine parses the full HTML file, extracts only the parts needed to render the voice, and exports them as an XML document. From this XML document, a proper SSML document is generated that Amazon Polly can use to generate the voice.

The state machine then uses an SDK integration with Amazon Polly that takes the SSML file and generates the voice as an mp3 audio file, which is stored in S3. The state machine diagram shows the flow. It also shows that I primarily use SDK integrations, simply to write less code. I wait in a simple loop where I fetch the status of the Polly voice rendering; if it's still in progress, a Wait state pauses for 5 seconds. When the voice rendering is finished, with either success or failure, a message is posted onto EventBridge.
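Below is a sketch of that polling loop as an Amazon States Language definition, generated from Python to keep the examples in one language. It assumes the SSML text is passed in the state input as `$.ssml`, and the state, bucket, voice, source and bus names are hypothetical. The Polly calls use the AWS SDK integrations for `startSpeechSynthesisTask` and `getSpeechSynthesisTask`, whose `TaskStatus` is one of `scheduled`, `inProgress`, `completed` or `failed`.

```python
import json


def notify_state(detail_type: str, bus_name: str) -> dict:
    """Task state that posts the Polly task result to EventBridge, then ends."""
    return {
        "Type": "Task",
        "Resource": "arn:aws:states:::events:putEvents",
        "Parameters": {
            "Entries": [{
                "Source": "blog.voice-pipeline",  # hypothetical source
                "DetailType": detail_type,
                "Detail.$": "$.task.SynthesisTask",
                "EventBusName": bus_name,
            }]
        },
        "End": True,
    }


def polly_state_machine(output_bucket: str, bus_name: str, voice_id: str = "Matthew") -> dict:
    status = "$.task.SynthesisTask.TaskStatus"
    return {
        "StartAt": "StartSpeechSynthesis",
        "States": {
            "StartSpeechSynthesis": {
                "Type": "Task",
                "Resource": "arn:aws:states:::aws-sdk:polly:startSpeechSynthesisTask",
                "Parameters": {
                    "OutputFormat": "mp3",
                    "OutputS3BucketName": output_bucket,
                    "TextType": "ssml",
                    "Text.$": "$.ssml",
                    "VoiceId": voice_id,
                },
                "ResultPath": "$.task",
                "Next": "GetSpeechSynthesisTask",
            },
            "GetSpeechSynthesisTask": {
                "Type": "Task",
                "Resource": "arn:aws:states:::aws-sdk:polly:getSpeechSynthesisTask",
                "Parameters": {"TaskId.$": "$.task.SynthesisTask.TaskId"},
                "ResultPath": "$.task",
                "Next": "IsFinished",
            },
            "IsFinished": {
                "Type": "Choice",
                "Choices": [
                    {"Variable": status, "StringEquals": "completed", "Next": "NotifySuccess"},
                    {"Variable": status, "StringEquals": "failed", "Next": "NotifyFailure"},
                ],
                "Default": "Wait5Seconds",  # scheduled or inProgress: poll again
            },
            "Wait5Seconds": {"Type": "Wait", "Seconds": 5, "Next": "GetSpeechSynthesisTask"},
            "NotifySuccess": notify_state("VoiceRendered", bus_name),
            "NotifyFailure": notify_state("VoiceRenderingFailed", bus_name),
        },
    }
```

`json.dumps(polly_state_machine(...))` produces the definition string that, for example, boto3's Step Functions `create_state_machine(definition=...)` accepts.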

Here is some of the code I use to extract the data from the HTML and convert it to XML.

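```python
from xml.sax.saxutils import escape

from bs4 import BeautifulSoup


def extract(contents):
    """Reduce a rendered post to a flat <jXML> document of readable blocks."""
    data = "<jXML>"
    soup = BeautifulSoup(contents, "lxml")

    # Replace links with their plain text so only the words are read.
    for child in soup.find_all("a"):
        child.replace_with(child.text)

    for child in soup.article.descendants:
        if is_image(child):
            # Read the alt text instead of the image; escape it so the XML stays valid.
            data = data + f"<p>{escape(child.get('alt', ''))}</p>"
        elif keep_tag(child):
            data = data + str(child)

    data = data + "</jXML>"
    return data


def keep_tag(tag):
    tagsToKeep = ["p", "h2", "h3", "h4"]
    return getattr(tag, "name", None) in tagsToKeep and not has_image_only(tag)


# These two helpers are not shown in the original; the versions below are assumptions.
def is_image(tag):
    return getattr(tag, "name", None) == "img"


def has_image_only(tag):
    # A block that wraps an image but has no text of its own.
    return tag.find("img") is not None and not tag.get_text(strip=True)
```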

And this converts the XML to SSML.

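```python
from xml.etree import ElementTree
from xml.sax.saxutils import escape


def convert_to_ssml(contents):
    """Turn the <jXML> document into SSML that Amazon Polly can read."""
    ssml = "<speak>"
    root = ElementTree.fromstring(contents)
    for child in root:
        if child.tag == "p":
            # <p> is also an SSML element, so paragraphs are copied as-is.
            ssml = ssml + ElementTree.tostring(child, encoding="unicode")
        elif child.tag == "h2":
            # Pause before each section heading, then read the heading text.
            ssml = ssml + '<break strength="x-strong"/>'
            ssml = ssml + escape(child.text or "")
    ssml = ssml + "</speak>"
    return ssml
```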
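Polly rejects SSML that isn't well-formed XML, returning an `InvalidSsmlException`, and text copied from a post can easily contain a bare `&` or `<`. A cheap local pre-check before starting the synthesis task can catch this early. Below is a sketch using only the Python standard library. Well-formed XML is necessary but not sufficient, since Polly also supports only a specific set of SSML tags.

```python
from xml.etree import ElementTree
from xml.sax.saxutils import escape


def is_well_formed_ssml(ssml: str) -> bool:
    """Local check: the document must parse as XML and have a <speak> root."""
    try:
        root = ElementTree.fromstring(ssml)
    except ElementTree.ParseError:
        return False
    return root.tag == "speak"
```

For example, `"<speak><p>Tom & Jerry</p></speak>"` fails the check, while the same text passed through `escape()` first passes it.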

Almost there! Voice has now been generated, and we are in the final part of the solution. I now need to modify the markdown file and update the pull-request with the audio file and the edited markdown file.

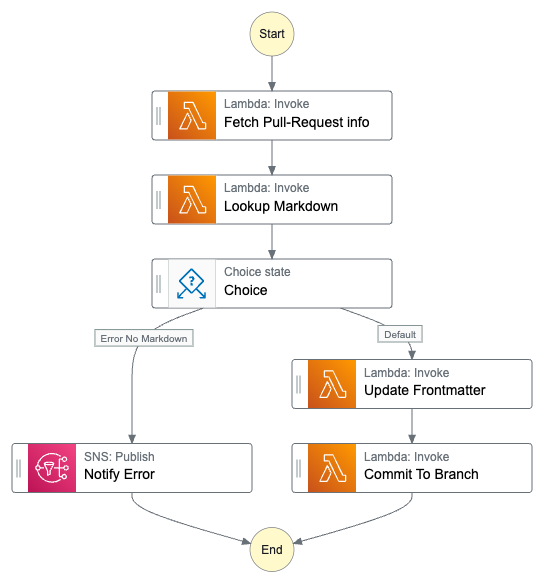

This pipeline is invoked by the message that the last step in the voice rendering pipeline added to the EventBridge event-bus. Information about the pull-request is fetched from GitHub using Octokit. When I edit the markdown, I just add a new entry in the Front Matter section with the location of the audio file. Because the entry lives in the Front Matter, the rendering process adds a standard audio tag to the post. Finally, a new commit is created and pushed to the development branch, which updates the pull-request with all the new data.
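Below is a minimal sketch of that front-matter edit. It assumes a flat `key: value` front matter block and uses a hypothetical `audio` key; the real key name and the layout that turns it into an audio tag aren't shown in this post. The function replaces an existing entry rather than appending a second one, so re-running the pipeline is safe.

```python
def add_audio_front_matter(markdown: str, audio_url: str, key: str = "audio") -> str:
    """Return the post with `key: audio_url` set in its front matter."""
    lines = markdown.split("\n")
    if not lines or lines[0].strip() != "---":
        raise ValueError("post has no front matter")
    try:
        end = next(i for i in range(1, len(lines)) if lines[i].strip() == "---")
    except StopIteration:
        raise ValueError("front matter is not closed") from None
    # Drop any previous entry for the key so re-runs don't create duplicates.
    body = [line for line in lines[1:end] if not line.startswith(f"{key}:")]
    return "\n".join([lines[0], *body, f"{key}: {audio_url}", *lines[end:]])
```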

Here is some of the code I use to create the commit.

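```javascript
import { Octokit } from "octokit";

// Assumes a module-level `octokit` client created with `new Octokit({ auth })`.

// Build a new tree on top of the parent tree, one entry per uploaded blob.
const createNewTree = async (blobs, paths, parentTreeSha) => {
  const tree = blobs.map(({ sha }, index) => ({
    path: paths[index],
    mode: "100644", // regular, non-executable file
    type: "blob",
    sha,
  }));
  const { data } = await octokit.rest.git.createTree({
    owner: process.env.OWNER,
    repo: process.env.REPO,
    tree,
    base_tree: parentTreeSha,
  });
  return data;
};

// Create a commit pointing at the new tree, with the current commit as parent.
const createNewCommit = async (message, currentTreeSha, currentCommitSha) =>
  (
    await octokit.rest.git.createCommit({
      owner: process.env.OWNER,
      repo: process.env.REPO,
      message,
      tree: currentTreeSha,
      parents: [currentCommitSha],
    })
  ).data;

// Move the pull-request branch to the new commit, which updates the pull-request.
const setBranchToCommit = (commitSha) =>
  octokit.rest.git.updateRef({
    owner: process.env.OWNER,
    repo: process.env.REPO,
    ref: "heads/post-polly-reads-the-blog", // this post's pull-request branch
    sha: commitSha,
  });
```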
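The snippet above starts from blobs that already exist. Before `createNewTree` runs, each file has to be uploaded with the Git Data API's create-blob endpoint (`octokit.rest.git.createBlob` in Octokit). The mp3 is binary, so it has to be sent base64-encoded. The overall order would be:

1. Read the branch ref.
2. Create the blobs.
3. Create the tree on top of the current commit's tree.
4. Create the commit.
5. Move the ref.

Below is a Python sketch of the blob request bodies, plus the same tree mapping as `createNewTree`. The function names are illustrative.

```python
import base64
from typing import List, Union


def blob_payload(content: Union[bytes, str]) -> dict:
    """Body for GitHub's create-blob endpoint (POST /repos/{owner}/{repo}/git/blobs)."""
    if isinstance(content, bytes):
        # Binary files such as the mp3 must be base64-encoded.
        return {"content": base64.b64encode(content).decode("ascii"), "encoding": "base64"}
    # Text files such as the edited markdown can be sent as-is.
    return {"content": content, "encoding": "utf-8"}


def tree_entries(paths: List[str], blob_shas: List[str]) -> List[dict]:
    """Same mapping as createNewTree: one regular-file entry per blob."""
    if len(paths) != len(blob_shas):
        raise ValueError("paths and blob SHAs must line up one-to-one")
    return [
        {"path": path, "mode": "100644", "type": "blob", "sha": sha}
        for path, sha in zip(paths, blob_shas)
    ]
```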

Now we have a complete, automated solution that generates voice and adds it to my posts. The pipeline starts when I create a new pull-request and runs fully automatically to the end, where I, as before, inspect the pull-request before merging it to the main branch. This became a very nice and smooth solution to a goal I have had for a long time.

Next step

What are the next steps in this project? I have a couple of main goals. I would like my posts to be available in more languages, such as Spanish or French. My plan is to use Amazon Translate to do some basic machine translation that I can publish. Since dev.to doesn't support the basic audio tag, I need to find a different way to provide the voice on those posts; one option is creating a YouTube video. A final step would be to automatically cross-post all my posts to dev.to, instead of doing it manually as I do today. And of course, after some cleanup, I want to release the entire pipeline as open source.

As you can see, there are still things to do, and there will be more posts about this as I progress with development.

Final Words

This was a really fun project where I got to play around with some of the AI services from AWS. It's really great to see how much you can accomplish with very little code and effort. Stay tuned for more.

Don't forget to follow me on LinkedIn and Twitter for more content, and read the rest of my blogs.