Building a serverless file manager

In my previous post, Serverless and event-driven design thinking, I used a fictitious service, the serverless file manager, as an example. That post only scratched the surface of how to design and build the service.

In this post we will go a bit deeper into the design and actually build the service using a serverless and event-driven architecture.

File manager service overview

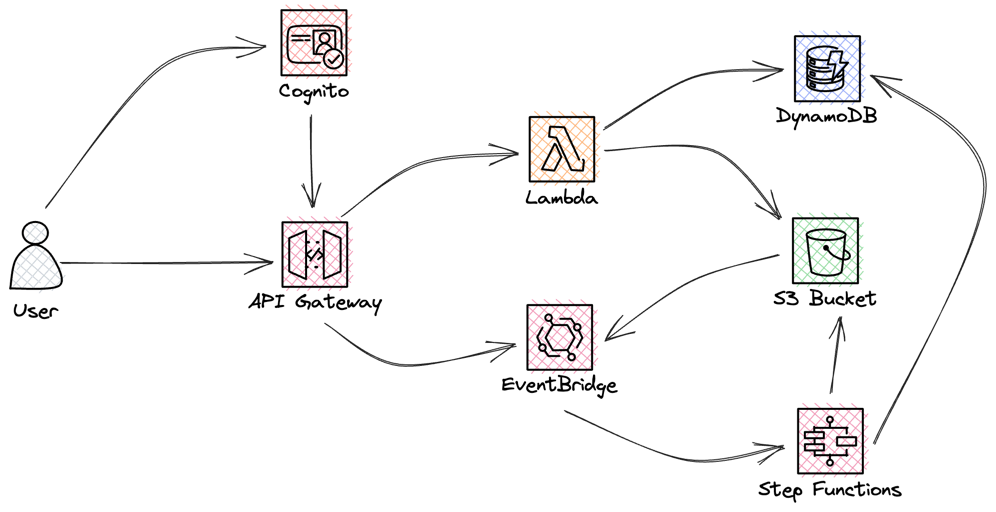

The service will store files in S3 and keep a record of all files in a DynamoDB table. Each file belongs to a specific user, and we also need to keep track of each user's total storage size. The system overview looks like this: an API exposes the functionality to the user, and the work is then carried out in a serverless and event-driven way.

Required infrastructure

To start with, we should create the common infrastructure components needed by this service. We need an S3 bucket for storing files and a custom EventBridge event-bus to act as event broker. We will be using both a custom event-bus and the default event-bus; more on that design further down. On the S3 bucket we need to enable EventBridge notifications. We will be using an API Gateway HTTP API as the front door that clients interact with.

    EventBridge:
      Type: AWS::Events::EventBus
      Properties:
        Name: !Sub ${Application}-eventbus

    FileBucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketEncryption:
          ServerSideEncryptionConfiguration:
            - ServerSideEncryptionByDefault:
                SSEAlgorithm: AES256
        NotificationConfiguration:
          EventBridgeConfiguration:
            EventBridgeEnabled: True
        BucketName: !Sub ${Application}-files

    HttpApi:
      Type: AWS::Serverless::HttpApi
      Properties:
        DefinitionBody:
          Fn::Transform:
            Name: AWS::Include
            Parameters:
              Location: ./api.yaml

Inventory table design

The design of the inventory DynamoDB table is fairly simple, so let's just have a quick look at it. I used a single table design, where all the different kinds of data live in the same table. We need a record of all of a user's files and of the user's total storage. We will be using a composite primary key consisting of a partition key and a sort key. Since I opted for a single table design, I will name the partition key PK and the sort key SK. That way I can store different kinds of data without the attribute names becoming misleading.

    InventoryTable:
      Type: AWS::DynamoDB::Table
      Properties:
        TableName: !Sub ${Application}-file-inventory
        BillingMode: PAY_PER_REQUEST
        AttributeDefinitions:
          - AttributeName: PK
            AttributeType: S
          - AttributeName: SK
            AttributeType: S
        KeySchema:
          - AttributeName: PK
            KeyType: HASH
          - AttributeName: SK
            KeyType: RANGE

When data is stored, PK will be the username. For a file, SK will be the full file path; for the total storage record, SK will have the static value 'Quota'.
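To make the key layout concrete, here is a small sketch of how the two item types could be built in Python. The helper names `file_item` and `quota_key` and the sample values are made up for this illustration; the attribute names follow the table definition above and the items written by the Update inventory StepFunction further down.

```python
def file_item(user: str, full_path: str, bucket: str, size: int, etag: str) -> dict:
    """Build a file record in DynamoDB's attribute-value format.

    PK is the username and SK is the full file path, which starts with the username.
    """
    return {
        "PK": {"S": user},
        "SK": {"S": full_path},
        "User": {"S": user},
        "Path": {"S": full_path},
        "Bucket": {"S": bucket},
        "Size": {"N": str(size)},  # DynamoDB numbers are transported as strings
        "Etag": {"S": etag},
    }


def quota_key(user: str) -> dict:
    """Key of the per-user total storage record; SK is the static value 'Quota'."""
    return {"PK": {"S": user}, "SK": {"S": "Quota"}}


# Hypothetical sample values, only to show the shape of the data.
item = file_item("alice", "alice/photos/cat.png", "my-app-files", 2048, "abc123")
print(item["PK"], item["SK"])
print(quota_key("alice"))
```

Because every file SK starts with the username and a slash, the 'Quota' record never collides with a file record and is easy to fetch on its own.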

If you want to learn more about DynamoDB and single table design, I would recommend The DynamoDB Book by Alex DeBrie.

Cloud design

As I mentioned last time, I normally use a simplified version of EventStorming when starting the design. During that process four different flows were identified: Put (Create and Update), Fetch, List, and Delete. The Fetch and List flows use a synchronous request-response design, Put contains both a synchronous request-response part and an asynchronous event part, and Delete is an asynchronous event design. In the event design we will be using both commands and events.

Let's now look at each of the flows and how to implement them.

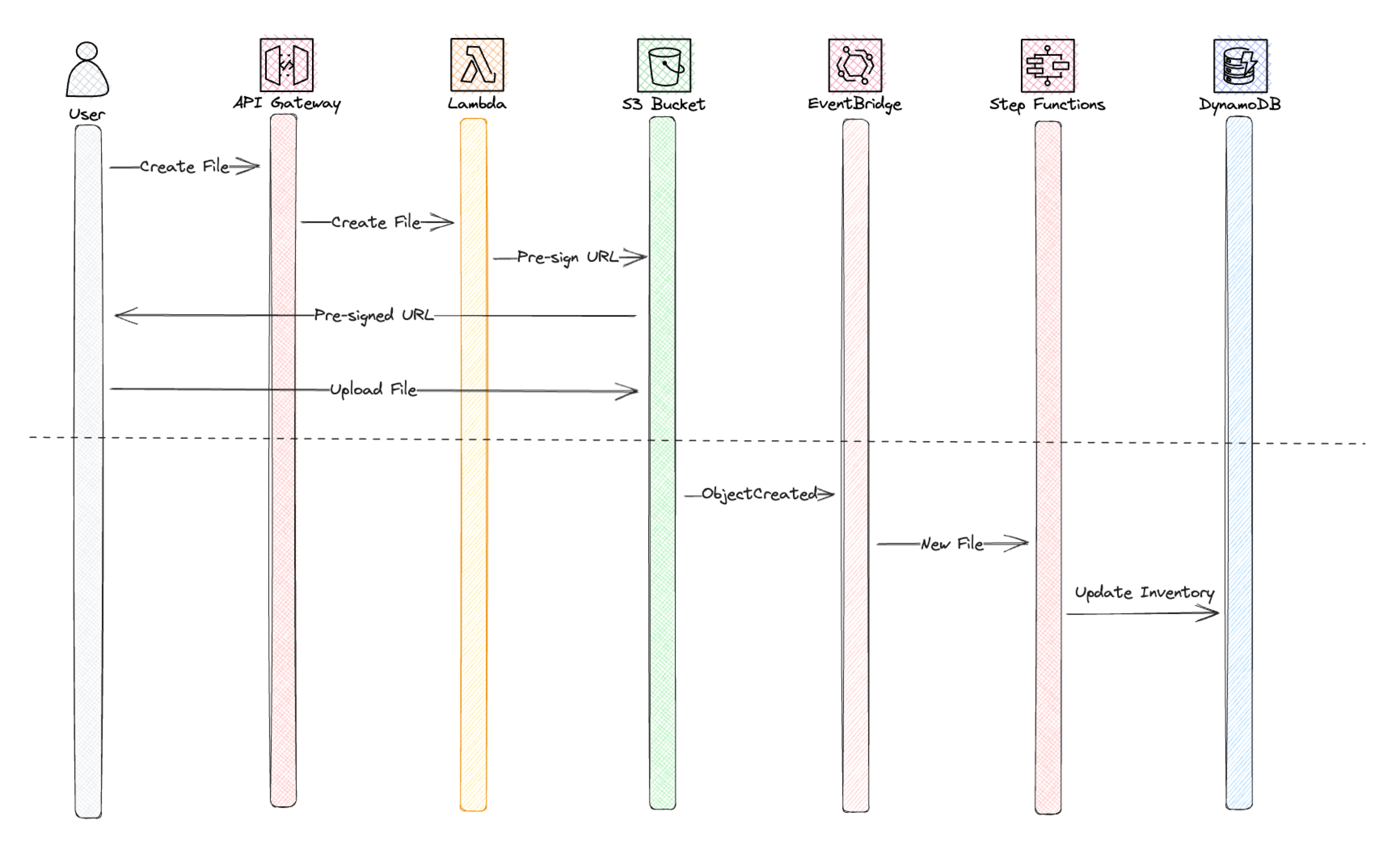

Put (Create and Update)

The put-flow, or create and update, is initiated by a client calling the front door API. The API will then create a pre-signed S3 URL that the client can use to upload the file. This part is a classic request-response model. Why use a pre-signed S3 URL that the client uploads to? Why not just post the file directly over the API? The primary reason is the Amazon API Gateway payload quota. The maximum payload size is 10 MB, which becomes a limitation if we want to support all kinds of files. The second reason is that the backend logic can decide on the structure in S3 and organize files in whatever structure is preferred.

When the client then uploads the file to S3, this generates an event (the part below the dashed line in the image). The event invokes a StepFunction that updates the file inventory and the user's total storage. We will dive deep into this StepFunction a bit further down.

We need to hook up a Lambda function to our API Gateway and implement our logic to pre-sign the S3 URL.

    CreateFunction:
      Type: AWS::Serverless::Function
      Properties:
        CodeUri: Api/Create
        Handler: create.handler
        Policies:
          - S3CrudPolicy:
              BucketName: !Ref FileBucket
          - DynamoDBReadPolicy:
              TableName: !Ref InventoryTable
        Environment:
          Variables:
            BUCKET: !Ref FileBucket
        Events:
          Create:
            Type: HttpApi
            Properties:
              Path: /files/create
              Method: post
              ApiId: !Ref HttpApi

    # Api/Create/create.py
    import json
    import boto3
    import os


    def handler(event, context):
        body = json.loads(event["body"])
        bucket = os.environ["BUCKET"]

        # Make sure a non-empty path ends with a slash before building the key
        if not body["path"].endswith("/") and len(body["path"]) > 0:
            body["path"] = body["path"] + "/"
        fullpath = body["user"] + "/" + body["path"] + body["name"]

        client = boto3.client("s3")
        response = client.generate_presigned_url(
            "put_object",
            Params={"Bucket": bucket, "Key": fullpath},
            ExpiresIn=600,
        )

        return {"url": response}
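On the client side, the returned URL is used with a plain HTTP PUT of the file bytes. The following is only a sketch using Python's standard library; the function names and the example URL are placeholders I made up, and a real client would of course take the URL from the API response.

```python
import urllib.request


def build_upload_request(presigned_url: str, data: bytes) -> urllib.request.Request:
    """Prepare an HTTP PUT of the raw file bytes against the pre-signed URL."""
    return urllib.request.Request(presigned_url, data=data, method="PUT")


def upload(presigned_url: str, data: bytes) -> int:
    """Send the PUT and return the HTTP status code."""
    with urllib.request.urlopen(build_upload_request(presigned_url, data)) as resp:
        return resp.status


# Building the request does not touch the network; the URL is a placeholder.
req = build_upload_request("https://example.com/presigned-put-url", b"hello")
print(req.get_method(), len(req.data))
```

Since the upload goes straight to S3, the file never passes through API Gateway and the 10 MB payload quota does not apply to it.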

Fetch

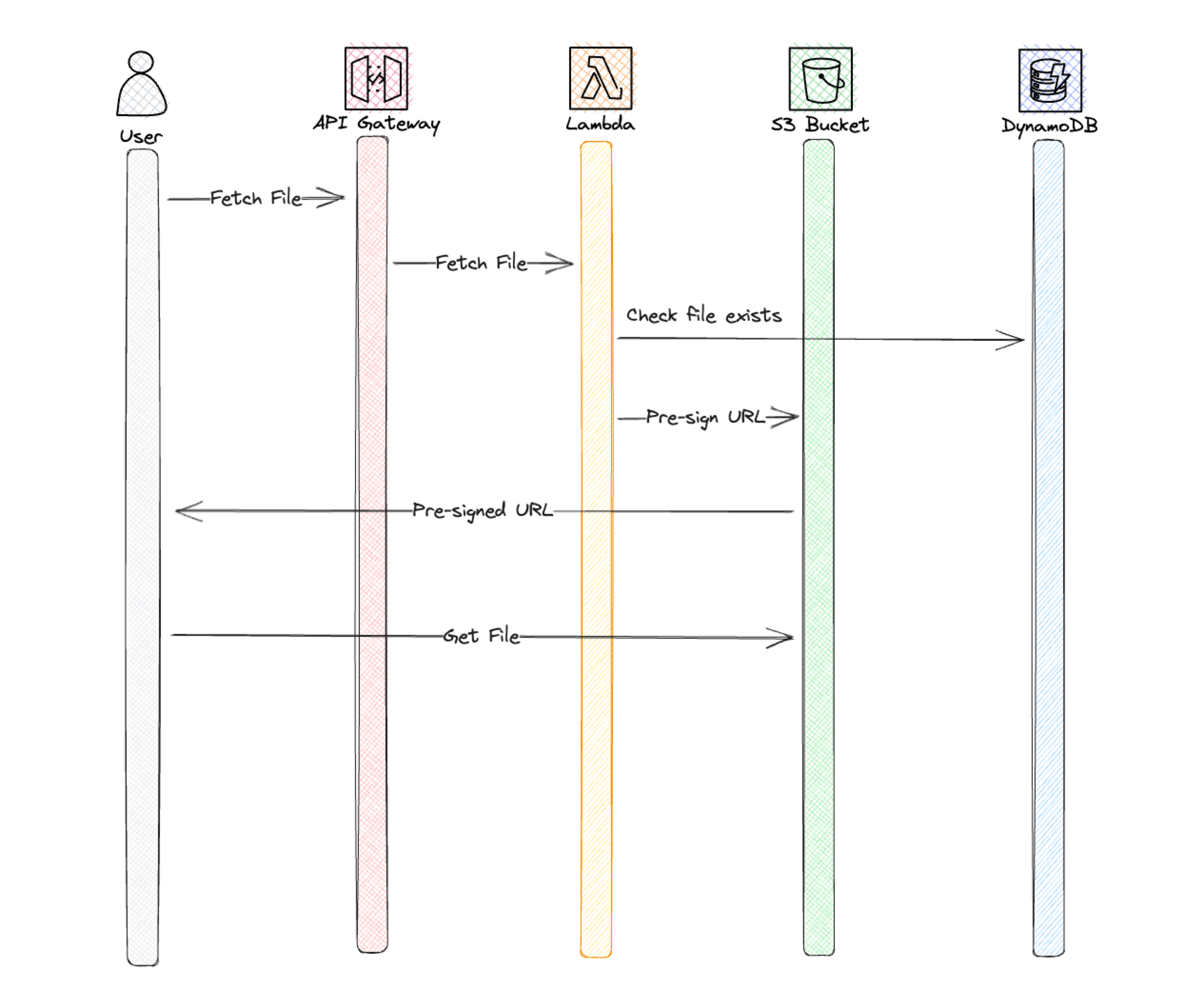

Now let's look at the Fetch flow, which is basically downloading a file. This is implemented as 100% request-response, as the client needs the file back in the response. It's not possible to do this in an event-driven way, unless you instead send the user a link by e-mail to download the file. That is a good approach if some form of processing needs to be done before downloading.

Once again the API will return a pre-signed S3 URL that the client can use to download the file. A different approach would be to use the new Lambda response streaming and a Lambda Function URL with CloudFront. But since we use API Gateway here, let's stick to the pre-signed URL pattern.

So let's create the Lambda Function and logic that will handle this flow.

    FetchFunction:
      Type: AWS::Serverless::Function
      Properties:
        CodeUri: Api/Fetch
        Handler: fetch.handler
        Policies:
          - S3CrudPolicy:
              BucketName: !Ref FileBucket
          # The handler queries the inventory table, so it needs read access to it
          - DynamoDBReadPolicy:
              TableName: !Ref InventoryTable
        Environment:
          Variables:
            BUCKET: !Ref FileBucket
            INVENTORY_TABLE: !Ref InventoryTable
        Events:
          Fetch:
            Type: HttpApi
            Properties:
              Path: /files/fetch
              Method: post
              ApiId: !Ref HttpApi

    # Api/Fetch/fetch.py
    import json
    import boto3
    import os


    def handler(event, context):
        body = json.loads(event["body"])
        bucket = os.environ["BUCKET"]

        if not body["path"].endswith("/") and len(body["path"]) > 0:
            body["path"] = body["path"] + "/"
        fullpath = body["user"] + "/" + body["path"] + body["name"]

        # Only hand out a download URL for files that exist in the inventory
        if check_file_exists(body["user"], fullpath):
            client = boto3.client("s3")
            response = client.generate_presigned_url(
                "get_object",
                Params={"Bucket": bucket, "Key": fullpath},
                ExpiresIn=600,
            )
            return {"url": response}
        else:
            # API Gateway reads the HTTP status from statusCode
            return {"statusCode": 404, "body": "File not found"}


    def check_file_exists(user, path):
        table = os.environ["INVENTORY_TABLE"]
        dynamodb_client = boto3.client("dynamodb")
        response = dynamodb_client.query(
            TableName=table,
            KeyConditionExpression="PK = :pk AND SK = :sk",
            ExpressionAttributeValues={":pk": {"S": user}, ":sk": {"S": path}},
        )

        return len(response["Items"]) > 0
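Both the Create and the Fetch handler build the object key in the same way. If you prefer to keep that logic in one place, it could be pulled out into a small helper. This is just a sketch; `build_full_path` is a name I made up and it is not part of the code above.

```python
def build_full_path(user: str, path: str, name: str) -> str:
    """Return '<user>/<path>/<name>', adding a trailing slash to path if it is missing."""
    if path and not path.endswith("/"):
        path = path + "/"
    return f"{user}/{path}{name}"


print(build_full_path("alice", "photos", "cat.png"))
print(build_full_path("alice", "", "cat.png"))
```

The helper behaves the same whether the client sends the path with or without the trailing slash, and an empty path puts the file directly under the user's prefix.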

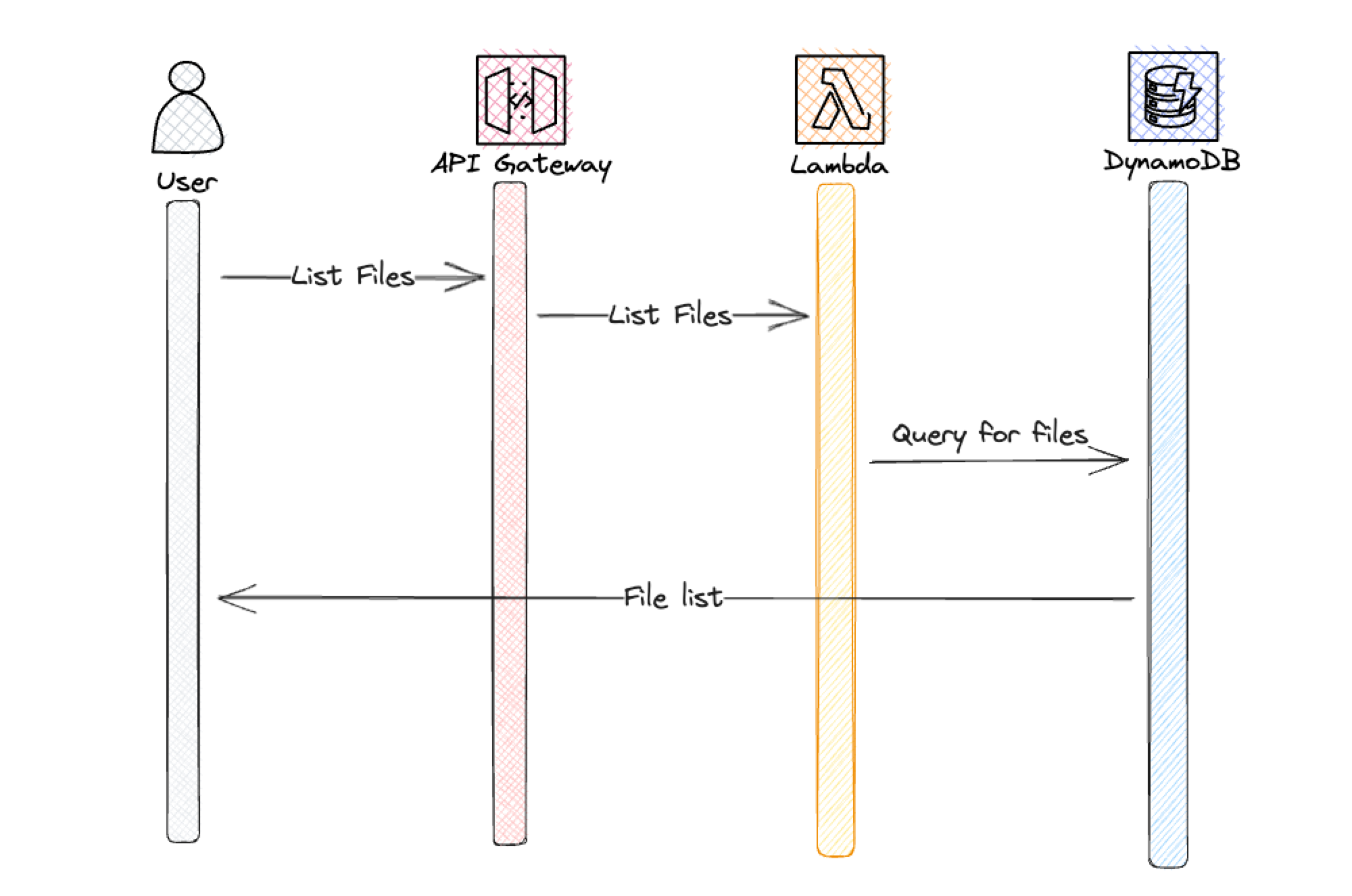

List

Just like the Fetch flow, the List flow is a classic request-response, for the same reason: the client expects to get a list of files back. We will query the file inventory to get the actual list and return it to the client.
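One detail worth keeping in mind when querying the inventory: a single DynamoDB Query call returns at most 1 MB of data, and signals that there is more by including a LastEvaluatedKey in the response. The handler in this post issues a single call, which is fine for small inventories. A paginated variant could look roughly like the sketch below. The function name `query_all_items` and the `FakeClient` stand-in are my own; in real code you would pass in `boto3.client("dynamodb")`.

```python
def query_all_items(dynamodb_client, **query_kwargs) -> list:
    """Run a DynamoDB Query and keep following LastEvaluatedKey until it is absent."""
    items = []
    while True:
        response = dynamodb_client.query(**query_kwargs)
        items.extend(response.get("Items", []))
        last_key = response.get("LastEvaluatedKey")
        if not last_key:
            return items
        # Continue where the previous page stopped
        query_kwargs["ExclusiveStartKey"] = last_key


class FakeClient:
    """Stand-in for the DynamoDB client that serves two pages of results."""

    def __init__(self):
        self.pages = [
            {
                "Items": [{"SK": {"S": "alice/a.txt"}}],
                "LastEvaluatedKey": {"PK": {"S": "alice"}, "SK": {"S": "alice/a.txt"}},
            },
            {"Items": [{"SK": {"S": "alice/b.txt"}}]},
        ]
        self.calls = []

    def query(self, **kwargs):
        self.calls.append(dict(kwargs))
        return self.pages[len(self.calls) - 1]


fake = FakeClient()
all_items = query_all_items(fake, TableName="inventory")
print(len(all_items), "items in", len(fake.calls), "calls")
```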

So let's create infrastructure and logic that will handle this flow.

    ListFunction:
      Type: AWS::Serverless::Function
      Properties:
        CodeUri: Api/List
        Handler: list.handler
        Policies:
          - DynamoDBReadPolicy:
              TableName: !Ref InventoryTable
        Environment:
          Variables:
            INVENTORY_TABLE: !Ref InventoryTable
        Events:
          List:
            Type: HttpApi
            Properties:
              Path: /files/list
              Method: post
              ApiId: !Ref HttpApi

    # Api/List/list.py
    import json
    import boto3
    import os


    def handler(event, context):
        body = json.loads(event["body"])
        table = os.environ["INVENTORY_TABLE"]

        dynamodb_client = boto3.client("dynamodb")
        # SK holds the full path, which starts with "<user>/"
        response = dynamodb_client.query(
            TableName=table,
            KeyConditionExpression="PK = :user AND begins_with ( SK , :filter )",
            ExpressionAttributeValues={
                ":user": {"S": body["user"]},
                ":filter": {"S": f'{body["user"]}/{body["filter"]}'},
            },
        )

        file_list = []
        for item in response["Items"]:
            file = {
                # Strip the user prefix so the client sees its own relative path
                "Path": item["Path"]["S"].replace(f'{body["user"]}/', ""),
                "Size": item["Size"]["N"],
                "Etag": item["Etag"]["S"],
            }
            file_list.append(file)

        return file_list

Delete

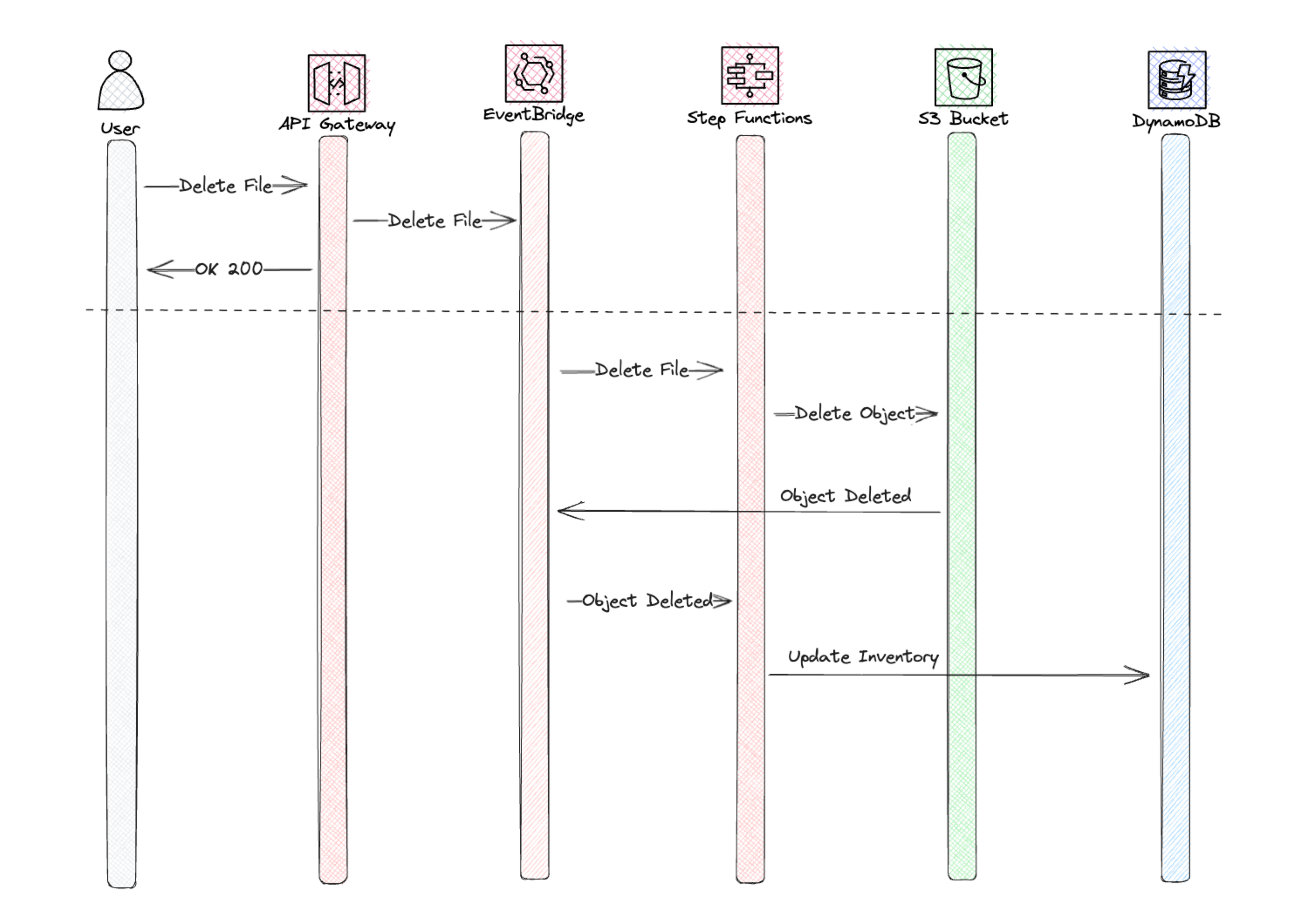

With the delete flow we start to touch on a fully event-driven flow. In this flow the delete command is added directly onto an EventBridge custom event-bus, following the storage-first pattern. Directly after adding the command to the bus, API Gateway returns an HTTP 200, Status OK, to the client. All the work is then carried out in an asynchronous manner.
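To give a feeling for what ends up on the bus, here is a sketch of the equivalent PutEvents entry built in Python. The Source and DetailType values are the ones used in the API specification in this post, and the body fields (user, path, name) are the ones the delete StepFunction reads. The function name and the bus name are placeholders I made up.

```python
import json


def delete_command_entry(user: str, path: str, name: str, bus_name: str) -> dict:
    """Build one PutEvents entry equivalent to what the API integration sends."""
    return {
        "Source": "FileApi",
        "DetailType": "DeleteFile",
        # Detail must be a JSON string; here it is the request body from the client
        "Detail": json.dumps({"user": user, "path": path, "name": name}),
        "EventBusName": bus_name,
    }


entry = delete_command_entry("alice", "photos", "cat.png", "my-app-eventbus")
print(json.dumps(entry, indent=2))
# With boto3 the same entry could be sent with:
#   boto3.client("events").put_events(Entries=[entry])
```

In the service itself no code is needed for this step, since API Gateway puts the event on the bus directly.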

We create an OpenAPI specification to set up the direct integration between API Gateway and EventBridge. At the same time we need to give API Gateway permission to call EventBridge.

    # api.yaml
    openapi: 3.0.1
    info:
      title: File Management API
    paths:
      /files/delete:
        post:
          responses:
            default:
              description: Send delete command to EventBridge
          x-amazon-apigateway-integration:
            integrationSubtype: EventBridge-PutEvents
            credentials:
              Fn::GetAtt: [ApiRole, Arn]
            requestParameters:
              Detail: $request.body
              DetailType: DeleteFile
              Source: FileApi
              EventBusName:
                Fn::GetAtt: [EventBridge, Name]
            payloadFormatVersion: "1.0"
            type: aws_proxy
            connectionType: INTERNET
    x-amazon-apigateway-importexport-version: "1.0"

    # SAM template
    ApiRole:
      Type: AWS::IAM::Role
      Properties:
        AssumeRolePolicyDocument:
          Version: 2012-10-17
          Statement:
            - Effect: Allow
              Principal:
                Service: apigateway.amazonaws.com
              Action:
                - sts:AssumeRole
        Policies:
          - PolicyName: ApiDirectWriteEventBridge
            PolicyDocument:
              Version: 2012-10-17
              Statement:
                Action:
                  - events:PutEvents
                Effect: Allow
                Resource:
                  - !Sub arn:aws:events:${AWS::Region}:${AWS::AccountId}:event-bus/${EventBridge}

As shown in the flow chart, let's use a StepFunction to delete the file from S3. By doing that, we can delete the file without writing a single line of code; instead we use the StepFunctions built-in support for calling AWS services.

    DeleteStateMachineStandard:
      Type: AWS::Serverless::StateMachine
      Properties:
        DefinitionUri: Events/Delete/StateMachine/delete.asl.yaml
        Tracing:
          Enabled: true
        DefinitionSubstitutions:
          InventoryTable: !Ref InventoryTable
          FileBucket: !Ref FileBucket
        Policies:
          - Statement:
              - Effect: Allow
                Action:
                  - logs:*
                Resource: "*"
          - S3CrudPolicy:
              BucketName: !Ref FileBucket
          - DynamoDBCrudPolicy:
              TableName: !Ref InventoryTable
        Events:
          Delete:
            Type: EventBridgeRule
            Properties:
              EventBusName: !Ref EventBridge
              InputPath: $.detail
              Pattern:
                source:
                  - FileApi
                detail-type:
                  - DeleteFile

    # Events/Delete/StateMachine/delete.asl.yaml
    Comment: Delete File
    StartAt: Get Path Length
    States:
      Get Path Length:
        Type: Pass
        Parameters:
          user.$: $.user
          path.$: $.path
          name.$: $.name
          length.$: States.ArrayLength(States.StringSplit($.path,'/'))
        Next: Does path need an update?
      Does path need an update?:
        Type: Choice
        Choices:
          - And:
              - Not:
                  Variable: $.path
                  StringMatches: "*/"
              - Variable: $.length
                NumericGreaterThan: 0
            Next: Update Path
        Default: Create Full Path
      Update Path:
        Type: Pass
        Parameters:
          user.$: $.user
          path.$: States.Format('{}/',$.path)
          name.$: $.name
        Next: Create Full Path
      Create Full Path:
        Type: Pass
        Parameters:
          User.$: $.user
          Path.$: $.path
          Name.$: $.name
          FullPath.$: States.Format('{}/{}{}',$.user,$.path,$.name)
        Next: Find Item
      Find Item:
        Type: Task
        Next: File Exists?
        Parameters:
          TableName: ${InventoryTable}
          KeyConditionExpression: PK = :pk AND SK = :sk
          ExpressionAttributeValues:
            ":pk":
              S.$: $.User
            ":sk":
              S.$: $.FullPath
        ResultPath: $.ItemQueryResult
        Resource: arn:aws:states:::aws-sdk:dynamodb:query
      File Exists?:
        Type: Choice
        Choices:
          - Variable: $.ItemQueryResult.Count
            NumericGreaterThan: 0
            Comment: Delete File
            Next: DeleteObject
        Default: File Not Found
      DeleteObject:
        Type: Task
        Parameters:
          Bucket: ${FileBucket}
          Key.$: $.FullPath
        Resource: arn:aws:states:::aws-sdk:s3:deleteObject
        Next: The End
      File Not Found:
        Type: Pass
        Next: The End
      The End:
        Type: Pass
        End: true

Update inventory

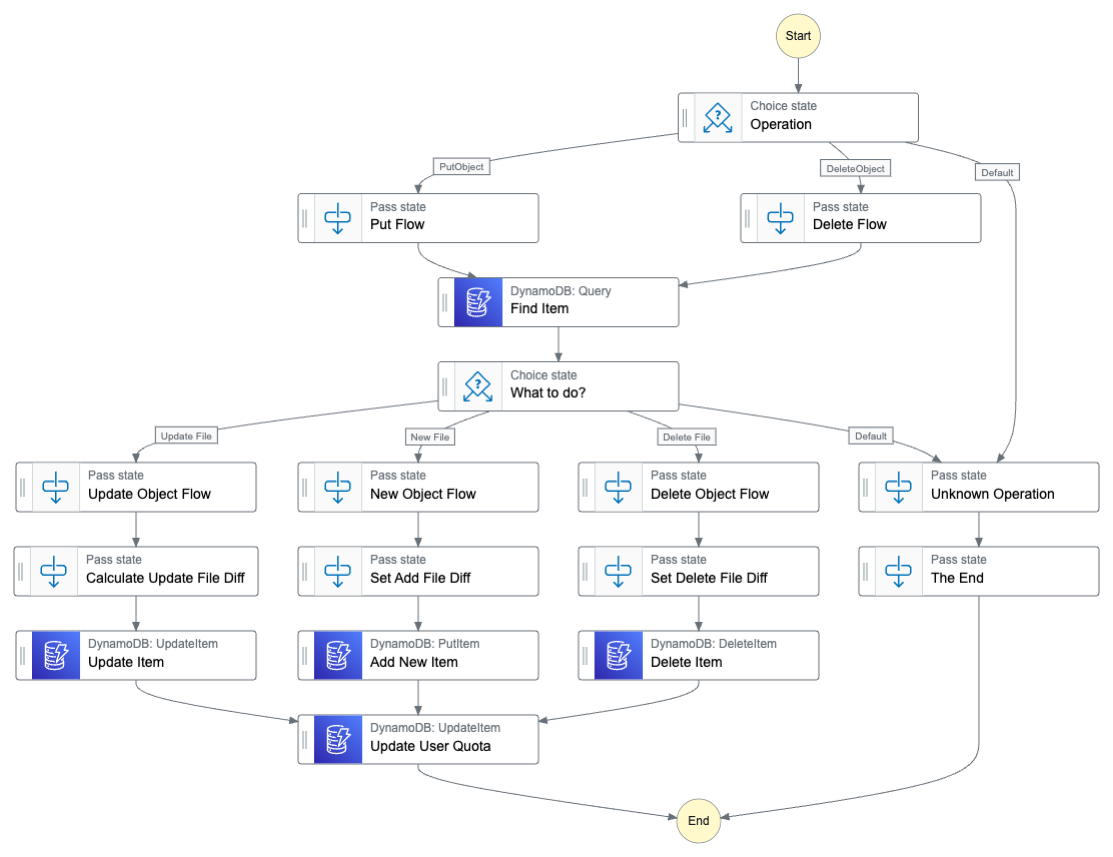

The update inventory StepFunction is invoked via the default event-bus when files are added, updated, or deleted in S3. It is responsible for keeping a correct inventory of all files and for keeping track of how much storage space a user has used. It runs in an asynchronous and event-driven way. One thing to remember is that the inventory becomes eventually consistent, so a list directly after a put might not return the newly added file.
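A client that needs to see its own upload in the list can handle the eventual consistency by polling for a short while. The following is a minimal sketch of that idea and not part of the service; `wait_for_file` and `fake_list` are names I made up, and `list_files` stands for whatever function the client uses to call the list endpoint.

```python
import time


def wait_for_file(list_files, expected_path: str, attempts: int = 5, delay: float = 0.5) -> bool:
    """Poll list_files() until expected_path shows up or the attempts run out."""
    for attempt in range(attempts):
        if any(f["Path"] == expected_path for f in list_files()):
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False


# Simulated list call: the file becomes visible on the third call.
calls = {"count": 0}


def fake_list():
    calls["count"] += 1
    return [{"Path": "photos/cat.png"}] if calls["count"] >= 3 else []


found = wait_for_file(fake_list, "photos/cat.png", attempts=5, delay=0.01)
print(found, calls["count"])
```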

As seen, we use a choice state to determine whether to take the Put or the Delete path in the flow, and also whether it's a new or an updated file. This way it's possible to isolate the logic into different flows, making updating and debugging easier. Throughout the entire flow I only use service or SDK integrations and the StepFunctions built-in intrinsic functions. Not a single Lambda function is used; StepFunctions is a very powerful low-code service.
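If you find it easier to read the routing as code, the decision logic of the two choice states and the size difference applied to the user's quota can be sketched in Python like this. It is only an illustration of the logic in the state machine definition; the function names `route` and `size_diff` are my own, and the service itself does not contain this code.

```python
def route(reason: str, existing_count: int) -> str:
    """Mirror the 'Operation' and 'What to do?' choice states."""
    if reason not in ("PutObject", "DeleteObject"):
        return "Unknown Operation"
    if reason == "PutObject":
        # An existing inventory record means the file is being overwritten
        return "Update Object Flow" if existing_count > 0 else "New Object Flow"
    # DeleteObject only makes sense for a file we have a record of
    return "Delete Object Flow" if existing_count > 0 else "Unknown Operation"


def size_diff(flow: str, new_size: int = 0, old_size: int = 0) -> int:
    """Change to add to the user's TotalSize for the chosen flow."""
    if flow == "New Object Flow":
        return new_size
    if flow == "Update Object Flow":
        return new_size - old_size
    if flow == "Delete Object Flow":
        return -old_size
    return 0


flow = route("PutObject", existing_count=1)
print(flow, size_diff(flow, new_size=300, old_size=100))
```

Adding a signed difference to the quota record means all three flows can share the same final Update User Quota step.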

    Comment: Update File Inventory
    StartAt: Operation
    States:
      Operation:
        Type: Choice
        Choices:
          - Variable: $.reason
            StringEquals: PutObject
            Next: Put Flow
            Comment: PutObject
          - Variable: $.reason
            StringEquals: DeleteObject
            Next: Delete Flow
            Comment: DeleteObject
        Default: Unknown Operation
      Put Flow:
        Type: Pass
        Parameters:
          User.$: States.ArrayGetItem(States.StringSplit($.object.key,'/'),0)
          Bucket.$: $.bucket.name
          Path.$: $.object.key
          Size.$: $.object.size
          Etag.$: $.object.etag
          Reason.$: $.reason
        ResultPath: $
        Next: Find Item
      Find Item:
        Type: Task
        Next: What to do?
        Parameters:
          TableName: ${InventoryTable}
          KeyConditionExpression: PK = :pk AND SK = :sk
          ExpressionAttributeValues:
            ":pk":
              S.$: $.User
            ":sk":
              S.$: $.Path
        ResultPath: $.ItemQueryResult
        Resource: arn:aws:states:::aws-sdk:dynamodb:query
      What to do?:
        Type: Choice
        Choices:
          - And:
              - Variable: $.ItemQueryResult.Count
                NumericGreaterThan: 0
              - Not:
                  Variable: $.Reason
                  StringMatches: DeleteObject
            Comment: Update File
            Next: Update Object Flow
          - And:
              - Variable: $.ItemQueryResult.Count
                NumericLessThanEquals: 0
              - Not:
                  Variable: $.Reason
                  StringMatches: DeleteObject
            Next: New Object Flow
            Comment: New File
          - And:
              - Variable: $.Reason
                StringMatches: DeleteObject
              - Variable: $.ItemQueryResult.Count
                NumericGreaterThan: 0
            Next: Delete Object Flow
            Comment: Delete File
        Default: Unknown Operation
      Delete Object Flow:
        Type: Pass
        Parameters:
          User.$: $.User
          Bucket.$: $.Bucket
          Path.$: $.Path
          Reason.$: $.Reason
          DeletionType.$: $.DeletionType
          Count.$: $.ItemQueryResult.Count
          OldItemSize.$: $.ItemQueryResult.Items[0].Size.N
          OldItemEtag.$: $.ItemQueryResult.Items[0].Etag.S
          OldItemUser.$: $.ItemQueryResult.Items[0].User.S
          OldItemPath.$: $.ItemQueryResult.Items[0].Path.S
          OldItemBucket.$: $.ItemQueryResult.Items[0].Bucket.S
        ResultPath: $
        Next: Set Delete File Diff
      Set Delete File Diff:
        Type: Pass
        Parameters:
          Size.$: States.Format('-{}',$.OldItemSize)
        ResultPath: $.Diff
        Next: Delete Item
      Delete Item:
        Type: Task
        Resource: arn:aws:states:::dynamodb:deleteItem
        Parameters:
          TableName: ${InventoryTable}
          Key:
            PK:
              S.$: $.User
            SK:
              S.$: $.Path
        ResultPath: null
        Next: Update User Quota
      Update Object Flow:
        Type: Pass
        Parameters:
          User.$: $.User
          Bucket.$: $.Bucket
          Path.$: $.Path
          Size.$: $.Size
          Etag.$: $.Etag
          Reason.$: $.Reason
          Count.$: $.ItemQueryResult.Count
          OldItemSize.$: $.ItemQueryResult.Items[0].Size.N
          OldItemEtag.$: $.ItemQueryResult.Items[0].Etag.S
          OldItemUser.$: $.ItemQueryResult.Items[0].User.S
          OldItemPath.$: $.ItemQueryResult.Items[0].Path.S
          OldItemBucket.$: $.ItemQueryResult.Items[0].Bucket.S
        ResultPath: $
        Next: Calculate Update File Diff
      Calculate Update File Diff:
        Type: Pass
        Parameters:
          Size.$: >-
            States.MathAdd(States.StringToJson(States.Format('{}',$.Size)),
            States.StringToJson(States.Format('-{}', $.OldItemSize)))
        ResultPath: $.Diff
        Next: Update Item
      Update Item:
        Type: Task
        Resource: arn:aws:states:::dynamodb:updateItem
        Parameters:
          TableName: ${InventoryTable}
          Key:
            PK:
              S.$: $.User
            SK:
              S.$: $.Path
          UpdateExpression: SET Size = :size, Etag = :etag
          ExpressionAttributeValues:
            ":size":
              N.$: States.Format('{}', $.Size)
            ":etag":
              S.$: $.Etag
        ResultPath: null
        Next: Update User Quota
      Update User Quota:
        Type: Task
        Resource: arn:aws:states:::dynamodb:updateItem
        ResultPath: null
        Parameters:
          TableName: ${InventoryTable}
          Key:
            PK:
              S.$: $.User
            SK:
              S: Quota
          UpdateExpression: ADD TotalSize :size
          ExpressionAttributeValues:
            ":size":
              N.$: States.Format('{}', $.Diff.Size)
        End: true
      Unknown Operation:
        Type: Pass
        Next: The End
      Delete Flow:
        Type: Pass
        Parameters:
          User.$: States.ArrayGetItem(States.StringSplit($.object.key,'/'),0)
          Bucket.$: $.bucket.name
          Path.$: $.object.key
          Reason.$: $.reason
          # The .$ suffix makes this a path lookup; bracket notation because of the hyphen
          DeletionType.$: "$['deletion-type']"
        Next: Find Item
      The End:
        Type: Pass
        End: true
      New Object Flow:
        Type: Pass
        Next: Set Add File Diff
      Set Add File Diff:
        Type: Pass
        Parameters:
          Size.$: $.Size
        ResultPath: $.Diff
        Next: Add New Item
      Add New Item:
        Type: Task
        Resource: arn:aws:states:::dynamodb:putItem
        Parameters:
          TableName: ${InventoryTable}
          Item:
            PK:
              S.$: $.User
            SK:
              S.$: $.Path
            User:
              S.$: $.User
            Path:
              S.$: $.Path
            Bucket:
              S.$: $.Bucket
            Size:
              N.$: States.Format('{}',$.Size)
            Etag:
              S.$: $.Etag
        ResultPath: null
        Next: Update User Quota

Patterns and best-practices used

In the design I used a couple of common patterns. Let's take a look at them.

Event-bus design

Since we rely on events from S3, we must use the default event-bus for those. All AWS services send their events to the default bus. We post our application-specific events to a custom event-bus. When using EventBridge as your event broker this is best practice: we leave the default bus to AWS service events and use one or more custom buses for our application events.
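The rule on the default bus that starts the update inventory StepFunction is not shown in this post, so treat the following as a sketch of what such an event pattern could look like rather than the exact one used. The source and detail-type values are the ones S3 uses for its EventBridge notifications; the bucket name, the sample event, and the tiny `matches` helper (which only supports exact values and nested keys) are made up for illustration.

```python
S3_INVENTORY_PATTERN = {
    "source": ["aws.s3"],
    "detail-type": ["Object Created", "Object Deleted"],
    "detail": {"bucket": {"name": ["my-app-files"]}},
}


def matches(pattern: dict, event: dict) -> bool:
    """Tiny subset of EventBridge matching: nested keys with lists of allowed exact values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


sample_event = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "detail": {
        "bucket": {"name": "my-app-files"},
        "object": {"key": "alice/photos/cat.png", "size": 2048, "etag": "abc123"},
        "reason": "PutObject",
    },
}

print(matches(S3_INVENTORY_PATTERN, sample_event))
print(matches(S3_INVENTORY_PATTERN, {**sample_event, "source": "FileApi"}))
```

An application event with source FileApi never matches this pattern, which is one more reason to keep AWS service events and application events on separate buses.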

Storage-first

Always aim to use the storage-first pattern. This will ensure that data and messages are not lost. You can read more about storage-first in my post Serverless patterns.

CRUD pattern

The CRUD pattern is primarily used in the Update Inventory StepFunction. By utilizing the powerful choice state we can route different operations down different paths. This makes the handling of each operation easy to overview, and it is also much easier to debug in case of a failure.

You can read more details in my previous post, or you can watch James Beswick talk about building Serverless Espresso at re:Invent.

Use JSON

Always create your commands and events in JSON. Why? JSON is widely supported by AWS services: SQS, SNS, and EventBridge can all apply powerful logic based on JSON formatted data. Don't use YAML, Protobuf, XML, or any other format.

Final Words

This was a quick walkthrough of the service used in my post on Serverless and event-driven design thinking. The source code for the entire setup is available in my GitHub repo, Serverless File Manager.

Don't forget to follow me on LinkedIn and Twitter for more content, and read the rest of my blogs.